Prusa MINI Firmware overview

Enumerations

| Type | Declaration |
|---|---|
| enum | pbuf_layer { PBUF_TRANSPORT, PBUF_IP, PBUF_LINK, PBUF_RAW_TX, PBUF_RAW } |
| enum | pbuf_type { PBUF_RAM, PBUF_ROM, PBUF_REF, PBUF_POOL } |

Functions | |

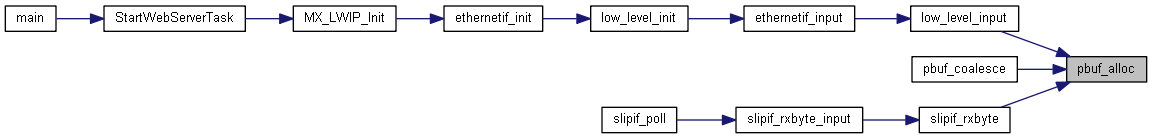

| struct pbuf * | pbuf_alloc (pbuf_layer layer, u16_t length, pbuf_type type) |

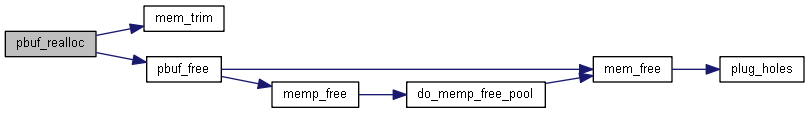

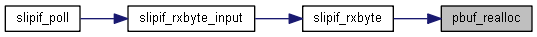

| void | pbuf_realloc (struct pbuf *p, u16_t new_len) |

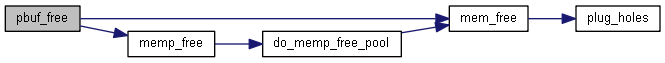

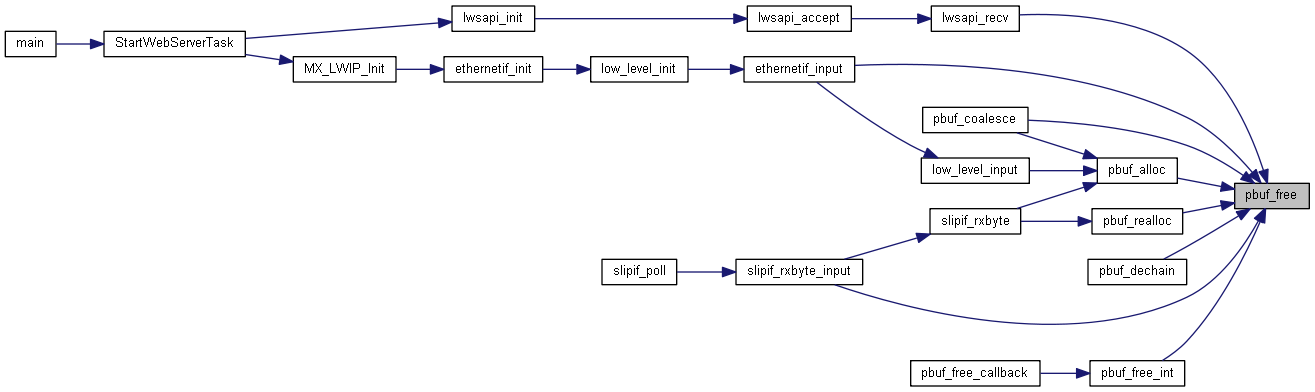

| u8_t | pbuf_free (struct pbuf *p) |

| void | pbuf_ref (struct pbuf *p) |

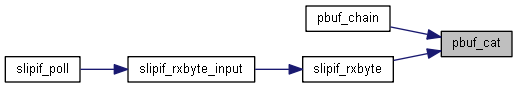

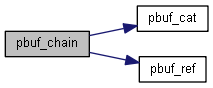

| void | pbuf_cat (struct pbuf *h, struct pbuf *t) |

| void | pbuf_chain (struct pbuf *h, struct pbuf *t) |

| err_t | pbuf_copy (struct pbuf *p_to, const struct pbuf *p_from) |

| u16_t | pbuf_copy_partial (const struct pbuf *buf, void *dataptr, u16_t len, u16_t offset) |

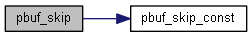

| struct pbuf * | pbuf_skip (struct pbuf *in, u16_t in_offset, u16_t *out_offset) |

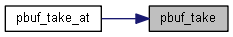

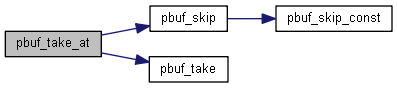

| err_t | pbuf_take (struct pbuf *buf, const void *dataptr, u16_t len) |

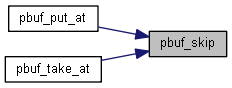

| err_t | pbuf_take_at (struct pbuf *buf, const void *dataptr, u16_t len, u16_t offset) |

| struct pbuf * | pbuf_coalesce (struct pbuf *p, pbuf_layer layer) |

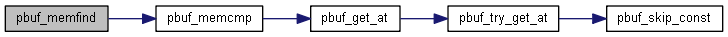

| u8_t | pbuf_get_at (const struct pbuf *p, u16_t offset) |

| int | pbuf_try_get_at (const struct pbuf *p, u16_t offset) |

| void | pbuf_put_at (struct pbuf *p, u16_t offset, u8_t data) |

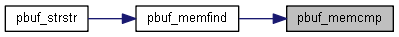

| u16_t | pbuf_memcmp (const struct pbuf *p, u16_t offset, const void *s2, u16_t n) |

| u16_t | pbuf_memfind (const struct pbuf *p, const void *mem, u16_t mem_len, u16_t start_offset) |

Packets are built from the pbuf data structure. A pbuf can either hold dynamically allocated packet contents or reference externally managed packet contents in RAM or ROM. Quick allocation for incoming packets is provided through pools of fixed-size pbufs.

A packet may span over multiple pbufs, chained as a singly linked list. This is called a "pbuf chain".

Multiple packets may be queued, also using this singly linked list. This is called a "packet queue".

So, a packet queue consists of one or more pbuf chains, each of which consists of one or more pbufs. CURRENTLY, PACKET QUEUES ARE NOT SUPPORTED! Use helper structs to queue multiple packets.

The differences between a pbuf chain and a packet queue are very precise but subtle.

The last pbuf of a packet has a ->tot_len field that equals the ->len field. It can be found by traversing the list. If the last pbuf of a packet has a ->next field other than NULL, more packets are on the queue.

Therefore, when looping through the pbufs of a single packet, the loop end condition is (p->tot_len == p->len), NOT (p->next == NULL).
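A minimal sketch of this end condition, using a hypothetical stand-in for struct pbuf that keeps only the next, payload, tot_len and len fields (the real lwIP structure has more). packet_bytes() is an illustrative helper, not an lwIP function: it sums the bytes of one packet, stops at the pbuf whose tot_len equals its len, and reports whatever follows (the next queued packet, if any) separately.

```c
#include <stdint.h>
#include <stddef.h>

typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;  /* bytes in this pbuf plus all following pbufs of the packet */
  u16_t len;      /* bytes in this pbuf only */
};

/* Sums the payload lengths of the packet starting at p and stores the pbuf
   that starts the next queued packet (NULL if there is none). */
static u16_t packet_bytes(struct pbuf *p, struct pbuf **next_packet) {
  u16_t total = 0;
  for (;;) {
    total = (u16_t)(total + p->len);
    if (p->tot_len == p->len) {   /* last pbuf of this packet */
      *next_packet = p->next;     /* may be non-NULL in a packet queue */
      return total;
    }
    p = p->next;                  /* more pbufs belong to this packet */
  }
}
```

Testing next == NULL instead would run straight into the following packet of a queue and over-count its bytes.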

Example of custom pbuf usage for zero-copy RX:
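A hedged, self-contained sketch of the zero-copy RX idea. When LWIP_SUPPORT_CUSTOM_PBUF is enabled, lwIP provides struct pbuf_custom (a struct pbuf followed by a custom_free_function callback) and pbuf_alloced_custom() for wrapping driver-owned memory as a pbuf. The code below does not use lwIP itself: struct pbuf, struct pbuf_custom and mock_pbuf_free() are simplified stand-ins, and rx_pbuf, rx_wrap() and the DMA slot number are hypothetical. It only shows the core pattern: the driver embeds the custom pbuf as the first member of its own wrapper, points the payload at the DMA buffer instead of copying, and gets the buffer back through the free callback once the last reference is dropped.

```c
#include <stdint.h>
#include <stddef.h>

typedef uint16_t u16_t;

/* Hypothetical stand-ins mirroring lwIP's struct pbuf and struct pbuf_custom. */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
  u16_t ref;
};
typedef void (*pbuf_free_custom_fn)(struct pbuf *p);
struct pbuf_custom {
  struct pbuf pbuf;                         /* must be the first member */
  pbuf_free_custom_fn custom_free_function;
};

/* Hypothetical driver wrapper: one RX DMA buffer handed to the stack without copying. */
struct rx_pbuf {
  struct pbuf_custom pc;                    /* first member, so the casts below are valid */
  int dma_slot;                             /* which DMA buffer to give back */
};

static int last_released_slot = -1;         /* stands in for re-arming the DMA ring */

/* Called when the last reference is dropped: return the buffer to the driver. */
static void rx_pbuf_free(struct pbuf *p) {
  struct rx_pbuf *w = (struct rx_pbuf *)p;
  last_released_slot = w->dma_slot;
}

/* Wraps DMA memory as a pbuf; a real driver would call pbuf_alloced_custom() here. */
static struct pbuf *rx_wrap(struct rx_pbuf *w, int slot, void *data, u16_t len) {
  w->dma_slot = slot;
  w->pc.custom_free_function = rx_pbuf_free;
  w->pc.pbuf.next = NULL;
  w->pc.pbuf.payload = data;                /* points at the DMA buffer: no copy */
  w->pc.pbuf.tot_len = len;
  w->pc.pbuf.len = len;
  w->pc.pbuf.ref = 1;
  return &w->pc.pbuf;
}

/* Simplified model of pbuf_free() for a single custom pbuf. */
static void mock_pbuf_free(struct pbuf *p) {
  if (--p->ref == 0) {
    ((struct pbuf_custom *)p)->custom_free_function(p);
  }
}
```

In a real driver, the wrapped pbuf would be handed to netif->input(), and the driver would call pbuf_free() on it itself only if netif->input() reports an error.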

| enum pbuf_layer |

Enumeration of pbuf layers

| Enumerator | Description |
|---|---|
| PBUF_TRANSPORT | Includes spare room for transport layer header, e.g. UDP header. Use this if you intend to pass the pbuf to functions like udp_send(). |
| PBUF_IP | Includes spare room for IP header. Use this if you intend to pass the pbuf to functions like raw_send(). |
| PBUF_LINK | Includes spare room for link layer header (ethernet header). Use this if you intend to pass the pbuf to functions like ethernet_output(). |
| PBUF_RAW_TX | Includes spare room for additional encapsulation header before ethernet headers (e.g. 802.11). Use this if you intend to pass the pbuf to functions like netif->linkoutput(). |
| PBUF_RAW | Use this for input packets in a netif driver when calling netif->input() in the most common case: an ethernet-layer netif driver. |

| enum pbuf_type |

Enumeration of pbuf types

| Enumerator | Description |
|---|---|
| PBUF_RAM | pbuf data is stored in RAM (used mostly for TX); struct pbuf and its payload are allocated in one piece of contiguous memory (so the first payload byte can be calculated from struct pbuf). pbuf_alloc() allocates PBUF_RAM pbufs as unchained pbufs (although that might change in future versions). This should be used for all OUTGOING packets (TX). |
| PBUF_ROM | pbuf data is stored in ROM, i.e. struct pbuf and its payload are located in totally different memory areas. Since it points to ROM, the payload does not have to be copied when queued for transmission. |
| PBUF_REF | Only the struct pbuf is allocated (from a pool of pbuf structures, not the PBUF_POOL pool); its payload refers to externally managed RAM. Much like PBUF_ROM, but the payload might change, so it has to be duplicated when queued before transmitting, depending on who has a 'ref' to it. |
| PBUF_POOL | pbuf payload refers to RAM. This one comes from a pool and should be used for RX. Payload can be chained (scatter-gather RX) but, like PBUF_RAM, struct pbuf and its payload are allocated in one piece of contiguous memory (so the first payload byte can be calculated from struct pbuf). Don't use this for TX: if the pool becomes empty, e.g. because of TCP queuing, you are unable to receive TCP ACKs! |

| struct pbuf* pbuf_alloc (pbuf_layer layer, u16_t length, pbuf_type type) |

Allocates a pbuf of the given type (possibly a chain for PBUF_POOL type).

The actual memory allocated for the pbuf is determined by the layer at which the pbuf is allocated and the requested size (the length parameter).

| Parameter | Description |
|---|---|
| layer | flag to define header size |
| length | size of the pbuf's payload |
| type | decides how and where the pbuf is allocated; one of the pbuf_type values (PBUF_RAM, PBUF_ROM, PBUF_REF, PBUF_POOL) described above |
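As a rough sketch of how the layer argument affects the allocation size: pbuf_alloc() reserves header room in front of the payload so that lower layers can prepend their headers without copying. The header sizes below (20-byte transport header, 20-byte IPv4 header, 14-byte Ethernet header, no extra encapsulation) are illustrative assumptions; lwIP derives the real values from configuration macros such as PBUF_TRANSPORT_HLEN, PBUF_IP_HLEN, PBUF_LINK_HLEN and PBUF_LINK_ENCAPSULATION_HLEN. The layer_t enum and both helpers are hypothetical, and struct overhead and alignment are ignored.

```c
#include <stdint.h>

typedef uint16_t u16_t;

/* Assumed header sizes for illustration only; lwIP takes these from its configuration. */
#define HLEN_TRANSPORT 20u  /* e.g. a TCP header without options */
#define HLEN_IP        20u  /* IPv4 header without options */
#define HLEN_LINK      14u  /* Ethernet header */
#define HLEN_ENCAP      0u  /* extra encapsulation before Ethernet (e.g. 802.11) */

/* Hypothetical local copy of the layer names, mirroring pbuf_layer. */
typedef enum { L_TRANSPORT, L_IP, L_LINK, L_RAW_TX, L_RAW } layer_t;

/* Bytes reserved in front of the payload so lower layers can prepend headers. */
static unsigned header_room(layer_t layer) {
  switch (layer) {
    case L_TRANSPORT: return HLEN_ENCAP + HLEN_LINK + HLEN_IP + HLEN_TRANSPORT;
    case L_IP:        return HLEN_ENCAP + HLEN_LINK + HLEN_IP;
    case L_LINK:      return HLEN_ENCAP + HLEN_LINK;
    case L_RAW_TX:    return HLEN_ENCAP;
    default:          return 0;  /* L_RAW: no header room */
  }
}

/* Header room plus payload length; struct pbuf overhead and alignment are ignored. */
static unsigned bytes_needed(layer_t layer, u16_t length) {
  return header_room(layer) + length;
}
```

Under these assumptions, a 100-byte payload allocated at the IP layer needs 134 bytes, while the same payload at PBUF_RAW needs only 100.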

| void pbuf_realloc (struct pbuf *p, u16_t new_len) |

Shrink a pbuf chain to a desired length.

| Parameter | Description |
|---|---|
| p | pbuf to shrink |
| new_len | desired new length of the pbuf chain |

Depending on the desired length, the first few pbufs in a chain might be skipped and left unchanged apart from their tot_len fields. The new last pbuf in the chain will be resized, and any remaining pbufs will be freed.
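The shrinking steps can be sketched with a hypothetical, simplified struct pbuf. shrink_chain() is an illustrative name, not lwIP's code: it only supports shrinking, reduces tot_len on the pbufs it keeps, resizes the new last pbuf, and returns the detached remainder, which real code would hand to pbuf_free().

```c
#include <stdint.h>
#include <stddef.h>

typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;  /* bytes in this pbuf and all following pbufs of the packet */
  u16_t len;      /* bytes in this pbuf only */
};

/* Shrinks the chain starting at p to new_len bytes. Returns the pbufs cut off
   the end (which real code would pass to pbuf_free()), or NULL. */
static struct pbuf *shrink_chain(struct pbuf *p, u16_t new_len) {
  if (new_len >= p->tot_len) {
    return NULL;                                /* growing is not supported */
  }
  u16_t shrink = (u16_t)(p->tot_len - new_len); /* bytes to drop */
  u16_t rem = new_len;                          /* bytes still to keep */
  struct pbuf *q = p;
  while (rem > q->len) {                        /* fully kept: only tot_len changes */
    rem = (u16_t)(rem - q->len);
    q->tot_len = (u16_t)(q->tot_len - shrink);
    q = q->next;
  }
  q->len = rem;                                 /* resize the new last pbuf */
  q->tot_len = rem;
  struct pbuf *rest = q->next;                  /* everything after it is dropped */
  q->next = NULL;
  return rest;
}
```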

| u8_t pbuf_free (struct pbuf *p) |

Dereference a pbuf chain or queue and deallocate any no-longer-used pbufs at the head of this chain or queue.

Decrements the pbuf reference count. If it reaches zero, the pbuf is deallocated.

For a pbuf chain, this is repeated for each pbuf in the chain, up to the first pbuf which has a non-zero reference count after decrementing. So, when all reference counts are one, the whole chain is freed.

| Parameter | Description |
|---|---|
| p | The pbuf (chain) to be dereferenced. |

Returns the number of pbufs that were deallocated from the head of the chain.
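A minimal sketch of this reference-count walk, using a hypothetical struct pbuf with a ref field and an illustrative unref_chain() helper that counts released pbufs instead of returning them to a pool or heap:

```c
#include <stdint.h>
#include <stddef.h>

typedef uint8_t u8_t;
typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf, including a reference count. */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
  u16_t ref;      /* number of owners of this pbuf */
};

/* Drops one reference from each pbuf, starting at the head, until a pbuf is
   still referenced afterwards; returns how many pbufs were released. */
static u8_t unref_chain(struct pbuf *p) {
  u8_t count = 0;
  while (p != NULL) {
    struct pbuf *next = p->next;   /* read before the pbuf is released */
    p->ref--;
    if (p->ref != 0) {
      break;                       /* someone else still holds this pbuf */
    }
    p->next = NULL;                /* a real implementation deallocates p here */
    count++;
    p = next;
  }
  return count;
}
```

The early break is what lets another owner keep the tail of a chain alive (for example after pbuf_chain()) while the head is released.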

| void pbuf_ref (struct pbuf *p) |

Increment the reference count of the pbuf.

| Parameter | Description |
|---|---|
| p | pbuf to increase the reference counter of |

| void pbuf_cat (struct pbuf *h, struct pbuf *t) |

Concatenate two pbufs (each may be a pbuf chain) and take over the caller's reference of the tail pbuf. The caller must not reference t afterwards; use pbuf_chain() if it still needs t.

| void pbuf_chain (struct pbuf *h, struct pbuf *t) |

Chain two pbufs (or pbuf chains) together.

The caller MUST call pbuf_free(t) once it has stopped using it. Use pbuf_cat() instead if you no longer use t.

| Parameter | Description |
|---|---|
| h | head pbuf (chain) |
| t | tail pbuf (chain) |

The ->tot_len fields of all pbufs of the head chain are adjusted. The ->next field of the last pbuf of the head chain is adjusted. The ->ref field of the first pbuf of the tail chain is adjusted.
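The field updates listed above can be sketched as follows. cat_pbufs() and chain_pbufs() are hypothetical names for simplified versions of pbuf_cat() and pbuf_chain(), working on a stripped-down struct pbuf; the only difference between them is the extra reference that chaining takes on the tail.

```c
#include <stdint.h>
#include <stddef.h>

typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf, including a reference count. */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
  u16_t ref;
};

/* Appends chain t to chain h; the caller's reference to t is handed over. */
static void cat_pbufs(struct pbuf *h, struct pbuf *t) {
  struct pbuf *p;
  for (p = h; p->next != NULL; p = p->next) {
    p->tot_len = (u16_t)(p->tot_len + t->tot_len);  /* every head pbuf grows */
  }
  p->tot_len = (u16_t)(p->tot_len + t->tot_len);    /* last pbuf of the head chain */
  p->next = t;                                      /* link the tail behind it */
}

/* Like cat_pbufs(), but the caller keeps its reference, so t gains one. */
static void chain_pbufs(struct pbuf *h, struct pbuf *t) {
  cat_pbufs(h, t);
  t->ref++;
}
```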

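| err_t pbuf_copy (struct pbuf *p_to, const struct pbuf *p_from) |

Create PBUF_RAM copies of pbufs.

Used to queue packets on behalf of the lwIP stack, such as ARP-based queueing. p_to must be large enough to hold the contents of p_from (p_to->tot_len >= p_from->tot_len).

| Parameter | Description |
|---|---|
| p_to | pbuf destination of the copy |
| p_from | pbuf source of the copy |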
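A simplified sketch of copying one chain into another whose pbuf boundaries differ. copy_chain() is a hypothetical helper on a stripped-down struct pbuf; it returns -1 instead of lwIP's err_t codes and refuses a destination whose tot_len is smaller than the source's.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

typedef uint8_t u8_t;
typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
};

/* Copies the bytes of p_from into p_to, walking both chains in step. */
static int copy_chain(struct pbuf *p_to, const struct pbuf *p_from) {
  if (p_to == NULL || p_from == NULL || p_to->tot_len < p_from->tot_len) {
    return -1;                       /* lwIP returns an err_t error here */
  }
  u16_t off_to = 0, off_from = 0;    /* positions inside the current pbufs */
  while (p_to != NULL && p_from != NULL) {
    u16_t n = (u16_t)(p_to->len - off_to);
    if ((u16_t)(p_from->len - off_from) < n) {
      n = (u16_t)(p_from->len - off_from);
    }
    if (n > 0) {
      memcpy((u8_t *)p_to->payload + off_to,
             (const u8_t *)p_from->payload + off_from, n);
    }
    off_to = (u16_t)(off_to + n);
    off_from = (u16_t)(off_from + n);
    if (off_to == p_to->len) {       /* destination pbuf full: move on */
      p_to = p_to->next;
      off_to = 0;
    }
    if (off_from == p_from->len) {   /* source pbuf consumed: move on */
      p_from = p_from->next;
      off_from = 0;
    }
  }
  return 0;
}
```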

| u16_t pbuf_copy_partial (const struct pbuf *buf, void *dataptr, u16_t len, u16_t offset) |

Copy (part of) the contents of a packet buffer to an application-supplied buffer.

| Parameter | Description |
|---|---|
| buf | the pbuf from which to copy data |
| dataptr | the application-supplied buffer |
| len | length of data to copy (dataptr must be big enough). No more than buf->tot_len will be copied, irrespective of len. |
| offset | offset into the packet buffer from where to begin copying len bytes |

Returns the number of bytes copied, or 0 on failure.
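The offset handling can be sketched as follows: whole pbufs before offset are skipped, then bytes are copied pbuf by pbuf until len bytes are copied or the chain ends. copy_partial_chain() and the struct pbuf stand-in are hypothetical simplifications; the return value is the number of bytes copied.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

typedef uint8_t u8_t;
typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
};

static u16_t copy_partial_chain(const struct pbuf *buf, void *dataptr,
                                u16_t len, u16_t offset) {
  u16_t copied = 0;
  for (const struct pbuf *p = buf; p != NULL && len > 0; p = p->next) {
    if (offset >= p->len) {            /* this pbuf lies entirely before offset */
      offset = (u16_t)(offset - p->len);
      continue;
    }
    u16_t n = (u16_t)(p->len - offset);
    if (n > len) {
      n = len;                         /* copy only what was asked for */
    }
    memcpy((u8_t *)dataptr + copied, (const u8_t *)p->payload + offset, n);
    copied = (u16_t)(copied + n);
    len = (u16_t)(len - n);
    offset = 0;                        /* later pbufs are read from their start */
  }
  return copied;
}
```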

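| struct pbuf* pbuf_skip (struct pbuf *in, u16_t in_offset, u16_t *out_offset) |

Skip a number of bytes at the start of a pbuf.

| Parameter | Description |
|---|---|
| in | input pbuf |
| in_offset | offset to skip |
| out_offset | resulting offset in the returned pbuf |

Returns the pbuf that contains the byte at in_offset, or NULL if in_offset lies beyond the end of the chain.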
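A sketch of the skipping logic, with skip_chain() as a hypothetical simplified helper: it walks past every pbuf that lies entirely before in_offset and returns the pbuf containing that byte, or NULL when in_offset is at or past the end of the chain.

```c
#include <stdint.h>
#include <stddef.h>

typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
};

static struct pbuf *skip_chain(struct pbuf *in, u16_t in_offset, u16_t *out_offset) {
  struct pbuf *q = in;
  u16_t off = in_offset;
  while (q != NULL && q->len <= off) {   /* byte is not in this pbuf */
    off = (u16_t)(off - q->len);
    q = q->next;
  }
  if (out_offset != NULL) {
    *out_offset = off;                   /* position inside the returned pbuf */
  }
  return q;
}
```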

| err_t pbuf_take (struct pbuf *buf, const void *dataptr, u16_t len) |

Copy application-supplied data into a pbuf. This function can copy at most buf->tot_len bytes.

| Parameter | Description |
|---|---|
| buf | pbuf to fill with data |
| dataptr | application-supplied data buffer |
| len | length of the application-supplied data buffer |
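A simplified sketch of filling a chain from a flat buffer. take_chain() is a hypothetical helper that returns 0 or -1 rather than err_t; it rejects data longer than buf->tot_len, matching the restriction above.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

typedef uint8_t u8_t;
typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
};

static int take_chain(struct pbuf *buf, const void *dataptr, u16_t len) {
  if (buf == NULL || dataptr == NULL || buf->tot_len < len) {
    return -1;                                /* lwIP returns an err_t error here */
  }
  const u8_t *src = dataptr;
  for (struct pbuf *p = buf; len > 0; p = p->next) {
    u16_t n = (p->len < len) ? p->len : len;  /* fill this pbuf as far as possible */
    memcpy(p->payload, src, n);
    src += n;
    len = (u16_t)(len - n);
  }
  return 0;
}
```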

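| err_t pbuf_take_at (struct pbuf *buf, const void *dataptr, u16_t len, u16_t offset) |

Same as pbuf_take() but puts data at an offset.

| Parameter | Description |
|---|---|
| buf | pbuf to fill with data |
| dataptr | application-supplied data buffer |
| len | length of the application-supplied data buffer |
| offset | offset in pbuf where to copy dataptr to |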
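The same idea with a starting offset, as a hypothetical simplified helper (take_at_chain()) that returns -1 when offset + len would run past buf->tot_len:

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

typedef uint8_t u8_t;
typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
};

static int take_at_chain(struct pbuf *buf, const void *dataptr, u16_t len, u16_t offset) {
  if (buf == NULL || dataptr == NULL || (uint32_t)offset + len > buf->tot_len) {
    return -1;                         /* data would not fit: lwIP returns an error */
  }
  struct pbuf *p = buf;
  while (p != NULL && offset >= p->len) {  /* skip to the pbuf containing offset */
    offset = (u16_t)(offset - p->len);
    p = p->next;
  }
  const u8_t *src = dataptr;
  while (len > 0) {                    /* then copy pbuf by pbuf from there */
    u16_t room = (u16_t)(p->len - offset);
    u16_t n = (room < len) ? room : len;
    memcpy((u8_t *)p->payload + offset, src, n);
    src += n;
    len = (u16_t)(len - n);
    offset = 0;
    p = p->next;
  }
  return 0;
}
```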

| struct pbuf* pbuf_coalesce (struct pbuf *p, pbuf_layer layer) |

Creates a single pbuf out of a chain of pbufs.

| Parameter | Description |
|---|---|
| p | the source pbuf |
| layer | pbuf_layer of the new pbuf |
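A sketch of coalescing under simplifying assumptions: coalesce_chain() is hypothetical, allocates with malloc() instead of pbuf_alloc(), ignores the layer argument (so no header room is reserved), and leaves the original chain alone. As we understand lwIP's behavior, the real function returns p unchanged when it is already a single pbuf or when allocation fails, and otherwise frees p and returns the new pbuf, so callers should always continue with the returned pointer.

```c
#include <stdint.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

typedef uint8_t u8_t;
typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
};

static struct pbuf *coalesce_chain(struct pbuf *p) {
  if (p->next == NULL) {
    return p;                                 /* already a single pbuf */
  }
  /* One allocation holds the header and the payload, similar to PBUF_RAM. */
  struct pbuf *q = malloc(sizeof *q + p->tot_len);
  if (q == NULL) {
    return p;                                 /* allocation failed: keep the chain */
  }
  q->next = NULL;
  q->payload = (u8_t *)(q + 1);               /* payload directly after the header */
  q->tot_len = p->tot_len;
  q->len = p->tot_len;
  u16_t off = 0;
  for (const struct pbuf *s = p; s != NULL; s = s->next) {
    memcpy((u8_t *)q->payload + off, s->payload, s->len);
    off = (u16_t)(off + s->len);
  }
  return q;                                   /* lwIP would also free p here */
}
```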

| u8_t pbuf_get_at (const struct pbuf *p, u16_t offset) |

Get one byte from the specified position in a pbuf. WARNING: returns zero for offset >= p->tot_len.

| Parameter | Description |
|---|---|
| p | pbuf to parse |
| offset | offset into p of the byte to return |

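| int pbuf_try_get_at (const struct pbuf *p, u16_t offset) |

Get one byte from the specified position in a pbuf. Returns the byte value (0 to 255), or -1 if offset >= p->tot_len.

| Parameter | Description |
|---|---|
| p | pbuf to parse |
| offset | offset into p of the byte to return |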
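Both accessors can be sketched with hypothetical helpers on a simplified struct pbuf: try_get_at_chain() returns -1 for an out-of-range offset, and get_at_chain() maps that case to zero, which is why a zero returned by pbuf_get_at() is ambiguous.

```c
#include <stdint.h>
#include <stddef.h>

typedef uint8_t u8_t;
typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
};

static int try_get_at_chain(const struct pbuf *p, u16_t offset) {
  while (p != NULL && offset >= p->len) {   /* find the pbuf holding offset */
    offset = (u16_t)(offset - p->len);
    p = p->next;
  }
  if (p == NULL) {
    return -1;                              /* offset is beyond the packet */
  }
  return ((const u8_t *)p->payload)[offset];
}

static u8_t get_at_chain(const struct pbuf *p, u16_t offset) {
  int r = try_get_at_chain(p, offset);
  return (r < 0) ? 0 : (u8_t)r;             /* out of range reads as zero */
}
```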

| void pbuf_put_at (struct pbuf *p, u16_t offset, u8_t data) |

Put one byte to the specified position in a pbuf. WARNING: silently ignores offset >= p->tot_len.

| Parameter | Description |
|---|---|
| p | pbuf to fill |
| offset | offset into p of the byte to write |
| data | byte to write at an offset into p |
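A sketch of the write path with a hypothetical put_at_chain() helper on a simplified struct pbuf; out-of-range offsets are silently ignored, as the warning above describes:

```c
#include <stdint.h>
#include <stddef.h>

typedef uint8_t u8_t;
typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
};

static void put_at_chain(struct pbuf *p, u16_t offset, u8_t data) {
  while (p != NULL && offset >= p->len) {   /* find the pbuf holding offset */
    offset = (u16_t)(offset - p->len);
    p = p->next;
  }
  if (p != NULL) {
    ((u8_t *)p->payload)[offset] = data;    /* out of range: nothing is written */
  }
}
```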

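| u16_t pbuf_memcmp (const struct pbuf *p, u16_t offset, const void *s2, u16_t n) |

Compare pbuf contents at the specified offset with memory s2, both of length n. Returns zero if equal, nonzero otherwise.

| Parameter | Description |
|---|---|
| p | pbuf to compare |
| offset | offset into p at which to start comparing |
| s2 | buffer to compare |
| n | length of buffer to compare |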
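A sketch of the comparison with a hypothetical memcmp_chain() helper on a simplified struct pbuf. The nonzero return values follow our reading of lwIP's implementation and should be treated as an assumption: the 1-based index of the first differing byte, and 0xFFFF when offset + n exceeds p->tot_len.

```c
#include <stdint.h>
#include <stddef.h>

typedef uint8_t u8_t;
typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
};

static u16_t memcmp_chain(const struct pbuf *p, u16_t offset, const void *s2, u16_t n) {
  if ((uint32_t)offset + n > p->tot_len) {
    return 0xFFFF;                          /* range does not fit in the packet */
  }
  const u8_t *b = s2;
  const struct pbuf *q = p;
  for (u16_t i = 0; i < n; i++) {
    while (offset >= q->len) {              /* step into the pbuf holding this byte */
      offset = (u16_t)(offset - q->len);
      q = q->next;
    }
    if (((const u8_t *)q->payload)[offset] != b[i]) {
      return (u16_t)(i + 1);                /* 1-based position of first mismatch */
    }
    offset++;
  }
  return 0;                                 /* all n bytes are equal */
}
```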

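| u16_t pbuf_memfind (const struct pbuf *p, const void *mem, u16_t mem_len, u16_t start_offset) |

Find the first occurrence of mem (with length mem_len) in pbuf p, starting at offset start_offset. Returns the offset of the match, or 0xFFFF if mem was not found.

| Parameter | Description |
|---|---|
| p | pbuf to search; maximum length is 0xFFFE since 0xFFFF is used as the return value for 'not found' |
| mem | search for the contents of this buffer |
| mem_len | length of 'mem' |
| start_offset | offset into p at which to start searching |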
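A sketch of the search as a naive byte-by-byte scan, using hypothetical helpers byte_at() and memfind_chain() on a simplified struct pbuf. It returns the offset of the first match at or after start_offset, or 0xFFFF when nothing is found, which is why p is limited to 0xFFFE bytes.

```c
#include <stdint.h>
#include <stddef.h>

typedef uint8_t u8_t;
typedef uint16_t u16_t;

/* Hypothetical stand-in for lwIP's struct pbuf (only the fields used here). */
struct pbuf {
  struct pbuf *next;
  void *payload;
  u16_t tot_len;
  u16_t len;
};

/* Byte at offset in the chain; the caller guarantees offset < p->tot_len. */
static u8_t byte_at(const struct pbuf *p, u16_t offset) {
  while (offset >= p->len) {
    offset = (u16_t)(offset - p->len);
    p = p->next;
  }
  return ((const u8_t *)p->payload)[offset];
}

static u16_t memfind_chain(const struct pbuf *p, const void *mem,
                           u16_t mem_len, u16_t start_offset) {
  const u8_t *m = mem;
  if ((uint32_t)start_offset + mem_len > p->tot_len) {
    return 0xFFFF;                          /* pattern cannot fit after start_offset */
  }
  u16_t last_start = (u16_t)(p->tot_len - mem_len);
  for (uint32_t i = start_offset; i <= last_start; i++) {  /* 32-bit i avoids wrap-around */
    u16_t j = 0;
    while (j < mem_len && byte_at(p, (u16_t)(i + j)) == m[j]) {
      j++;
    }
    if (j == mem_len) {
      return (u16_t)i;                      /* all mem_len bytes matched at i */
    }
  }
  return 0xFFFF;                            /* not found */
}
```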