Prusa MINI Firmware overview

    #include "lwip/opt.h"
    #include "lwip/mem.h"
    #include "lwip/def.h"
    #include "lwip/sys.h"
    #include "lwip/stats.h"
    #include "lwip/err.h"
    #include <string.h>

| Classes | |
|---|---|
| struct | mem |

| Macros | |
|---|---|
| #define | MIN_SIZE 12 |
| #define | MIN_SIZE_ALIGNED LWIP_MEM_ALIGN_SIZE(MIN_SIZE) |
| #define | SIZEOF_STRUCT_MEM LWIP_MEM_ALIGN_SIZE(sizeof(struct mem)) |
| #define | MEM_SIZE_ALIGNED LWIP_MEM_ALIGN_SIZE(MEM_SIZE) |
| #define | LWIP_RAM_HEAP_POINTER ram_heap |
| #define | LWIP_MEM_FREE_DECL_PROTECT() |
| #define | LWIP_MEM_FREE_PROTECT() sys_mutex_lock(&mem_mutex) |
| #define | LWIP_MEM_FREE_UNPROTECT() sys_mutex_unlock(&mem_mutex) |
| #define | LWIP_MEM_ALLOC_DECL_PROTECT() |
| #define | LWIP_MEM_ALLOC_PROTECT() |
| #define | LWIP_MEM_ALLOC_UNPROTECT() |

Functions | |

| LWIP_DECLARE_MEMORY_ALIGNED (ram_heap, MEM_SIZE_ALIGNED+(2U *SIZEOF_STRUCT_MEM)) | |

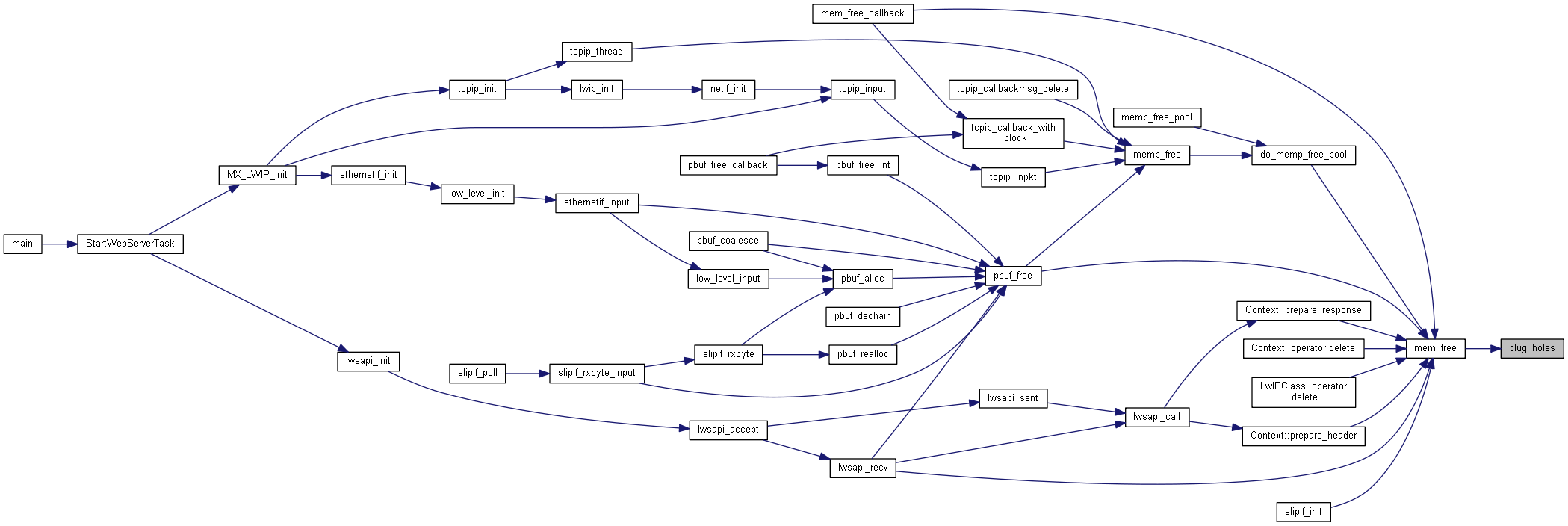

| static void | plug_holes (struct mem *mem) |

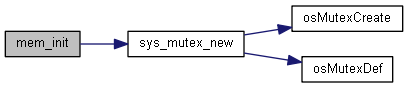

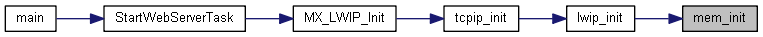

| void | mem_init (void) |

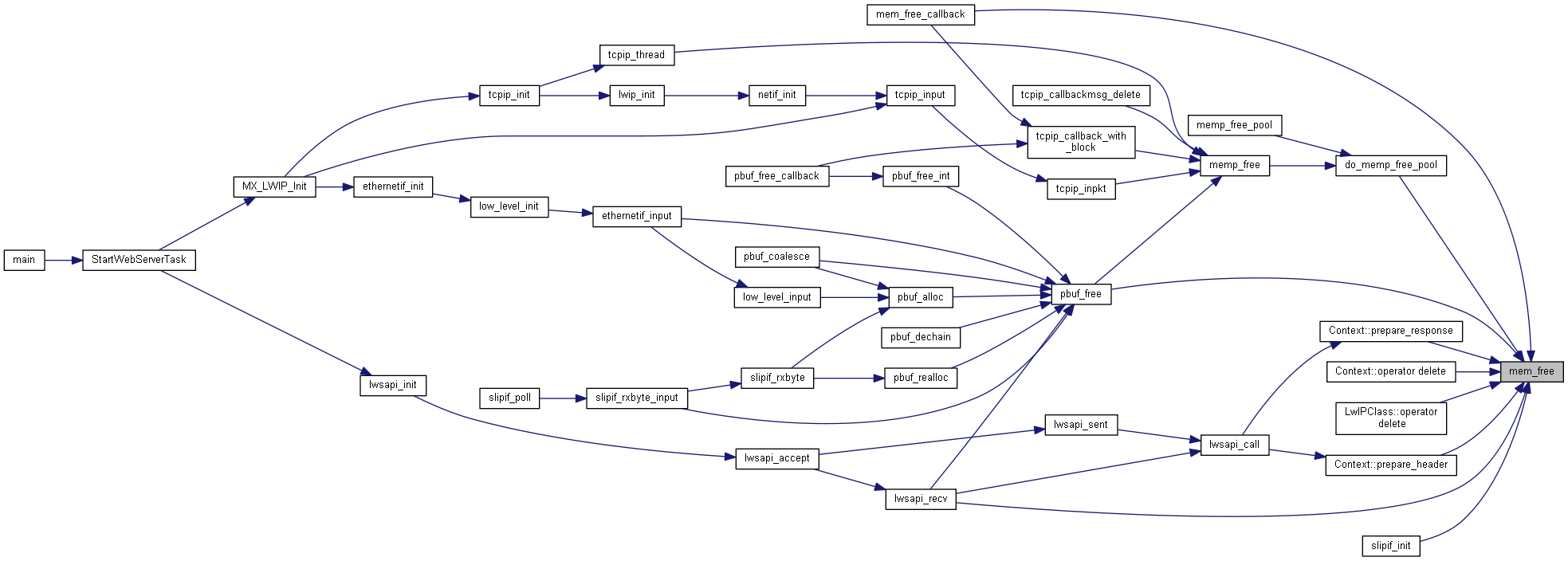

| void | mem_free (void *rmem) |

| void * | mem_trim (void *rmem, mem_size_t newsize) |

| void * | mem_malloc (mem_size_t size) |

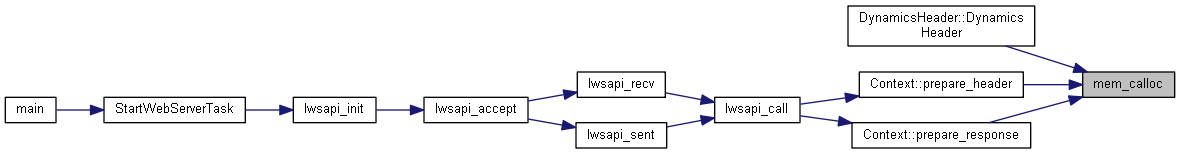

| void * | mem_calloc (mem_size_t count, mem_size_t size) |

Variables | |

| static u8_t * | ram |

| static struct mem * | ram_end |

| static struct mem * | lfree |

| static sys_mutex_t | mem_mutex |

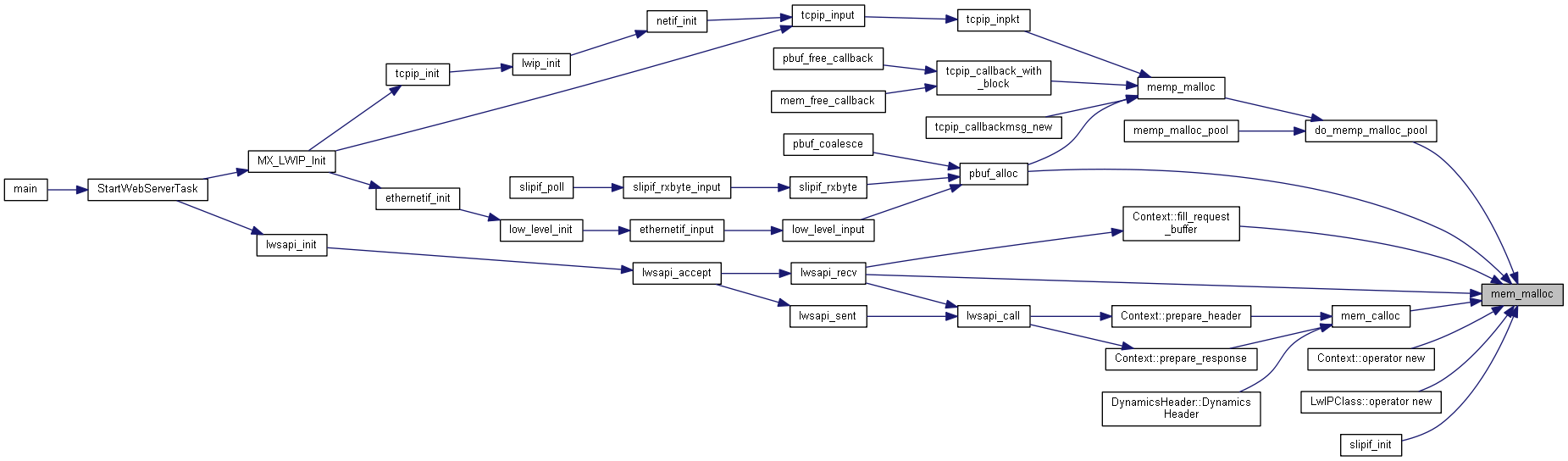

Dynamic memory manager

This is a lightweight replacement for the standard C library malloc().

If you want to use the standard C library malloc() instead, define MEM_LIBC_MALLOC to 1 in your lwipopts.h.

To let mem_malloc() use pools instead (this prevents fragmentation and is much faster than a heap, but might waste some memory), define MEM_USE_POOLS to 1 and MEMP_USE_CUSTOM_POOLS to 1, and create a file "lwippools.h" that lists the pools as shown below (more pools can be added between _START and _END):

    /* Define three pools with sizes 256, 512, and 1512 bytes */
    LWIP_MALLOC_MEMPOOL_START
    LWIP_MALLOC_MEMPOOL(20, 256)
    LWIP_MALLOC_MEMPOOL(10, 512)
    LWIP_MALLOC_MEMPOOL(5, 1512)
    LWIP_MALLOC_MEMPOOL_END

| #define MIN_SIZE 12 |

Every allocated block is at least MIN_SIZE bytes big. MIN_SIZE can be overridden to suit your needs: smaller values save space, while larger values can keep very small blocks from fragmenting the RAM too much.
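The rounding described above can be sketched in C. This is a minimal sketch that assumes MEM_ALIGNMENT is 4 (the firmware's actual setting is not stated on this page). LWIP_MEM_ALIGN_SIZE is re-defined locally with lwIP's round-up-to-a-multiple formula, and effective_request() is a hypothetical helper showing how a request is first aligned and then clamped up to MIN_SIZE_ALIGNED, as lwIP's mem_malloc() does before it searches the heap.

```c
#include <assert.h>
#include <stddef.h>

/* Assumption: MEM_ALIGNMENT is 4, a common setting on 32-bit MCUs. */
#define MEM_ALIGNMENT 4u

/* Local copy of lwIP's rule: round size up to a multiple of MEM_ALIGNMENT. */
#define LWIP_MEM_ALIGN_SIZE(size) (((size) + MEM_ALIGNMENT - 1u) & ~(MEM_ALIGNMENT - 1u))

#define MIN_SIZE 12u
#define MIN_SIZE_ALIGNED LWIP_MEM_ALIGN_SIZE(MIN_SIZE)

/* Hypothetical helper: the payload size a request occupies in the heap.
   The request is aligned first, then clamped up to MIN_SIZE_ALIGNED. */
size_t effective_request(size_t requested)
{
    size_t size = LWIP_MEM_ALIGN_SIZE(requested);
    if (size < MIN_SIZE_ALIGNED) {
        size = MIN_SIZE_ALIGNED;
    }
    return size;
}
```

With these assumptions a 1-byte request still occupies 12 payload bytes, while a 13-byte request is rounded up to 16.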

| #define MIN_SIZE_ALIGNED LWIP_MEM_ALIGN_SIZE(MIN_SIZE) |

| #define SIZEOF_STRUCT_MEM LWIP_MEM_ALIGN_SIZE(sizeof(struct mem)) |

| #define MEM_SIZE_ALIGNED LWIP_MEM_ALIGN_SIZE(MEM_SIZE) |

| #define LWIP_RAM_HEAP_POINTER ram_heap |

| #define LWIP_MEM_FREE_DECL_PROTECT() |

| #define LWIP_MEM_FREE_PROTECT() sys_mutex_lock(&mem_mutex) |

| #define LWIP_MEM_FREE_UNPROTECT() sys_mutex_unlock(&mem_mutex) |

| #define LWIP_MEM_ALLOC_DECL_PROTECT() |

| #define LWIP_MEM_ALLOC_PROTECT() |

| #define LWIP_MEM_ALLOC_UNPROTECT() |

| LWIP_DECLARE_MEMORY_ALIGNED(ram_heap, MEM_SIZE_ALIGNED + (2U * SIZEOF_STRUCT_MEM)) |

If you want to relocate the heap to external memory, define LWIP_RAM_HEAP_POINTER as a void pointer to that location. If you do, make sure the memory at that location is large enough. The heap (ram_heap) needs MEM_SIZE_ALIGNED + (2U * SIZEOF_STRUCT_MEM) bytes: MEM_SIZE_ALIGNED bytes plus one struct mem at the end and some room for alignment.
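The size requirement for an external region can be checked with a small calculation. This sketch assumes MEM_ALIGNMENT is 4 and that struct mem has the lwIP 2.x layout (two 16-bit offsets and a used flag, with MEM_OVERFLOW_CHECK disabled), which makes SIZEOF_STRUCT_MEM 8 on common ABIs. required_heap_bytes() and required_heap_bytes_unaligned() are hypothetical helpers; the second one additionally assumes that mem_init() aligns the heap pointer upward, which can skip up to MEM_ALIGNMENT - 1 bytes at the start of an unaligned region.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumption: MEM_ALIGNMENT is 4. */
#define MEM_ALIGNMENT 4u
#define LWIP_MEM_ALIGN_SIZE(size) (((size) + MEM_ALIGNMENT - 1u) & ~(MEM_ALIGNMENT - 1u))

/* Assumed header layout, modeled on lwIP 2.x's struct mem with a 16-bit
   mem_size_t and MEM_OVERFLOW_CHECK disabled. */
struct mem {
    uint16_t next; /* offset of the next header */
    uint16_t prev; /* offset of the previous header */
    uint8_t used;  /* nonzero while the block is allocated */
};

#define SIZEOF_STRUCT_MEM LWIP_MEM_ALIGN_SIZE(sizeof(struct mem))

/* Hypothetical helper: bytes a region must provide for a heap of mem_size
   bytes, mirroring MEM_SIZE_ALIGNED + (2U * SIZEOF_STRUCT_MEM). */
size_t required_heap_bytes(size_t mem_size)
{
    return LWIP_MEM_ALIGN_SIZE(mem_size) + 2u * SIZEOF_STRUCT_MEM;
}

/* Hypothetical helper: same as above, plus slack for aligning the start of a
   region whose base address may not be a multiple of MEM_ALIGNMENT. */
size_t required_heap_bytes_unaligned(size_t mem_size)
{
    return required_heap_bytes(mem_size) + (MEM_ALIGNMENT - 1u);
}
```

For MEM_SIZE = 1600 this gives 1616 bytes for an aligned region, or 1619 bytes if the base address might be unaligned.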

"Plug holes" by combining adjacent empty struct mems. After this function is through, there should not exist one empty struct mem pointing to another empty struct mem.

| mem | points to a struct mem that has just been freed |
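A toy model makes the merge rule concrete. This is a sketch, not the firmware's code: the heap is a hypothetical 128 bytes with 8-byte headers, and toy_plug_holes(), toy_fixture(), at() and off_of() are illustrative names. As in lwIP, headers sit inside the heap and link to each other through byte offsets, and the always-used ram_end sentinel is never merged.

```c
#include <assert.h>
#include <stdint.h>

/* Toy model with hypothetical sizes; headers live inside the heap and
   next/prev are byte offsets, as in lwIP's struct mem. */
#define HEAP_SIZE 128u  /* stands in for MEM_SIZE_ALIGNED */
#define HDR 8u          /* stands in for SIZEOF_STRUCT_MEM */

struct blk { uint16_t next, prev; uint8_t used; };

static union { uint32_t align; uint8_t bytes[HEAP_SIZE + 2u * HDR]; } heap;
static uint8_t *const ram = heap.bytes;
static struct blk *ram_end, *lfree;

static struct blk *at(uint16_t off) { return (struct blk *)(void *)(ram + off); }
static uint16_t off_of(const struct blk *m) { return (uint16_t)((const uint8_t *)m - ram); }

/* Sketch of plug_holes: merge a just-freed block with free neighbours. */
void toy_plug_holes(struct blk *m)
{
    assert(m->used == 0);

    struct blk *n = at(m->next);
    if (n != m && !n->used && n != ram_end) {
        /* Absorb the following free block into m. */
        if (lfree == n) lfree = m;
        m->next = n->next;
        if (n->next != HEAP_SIZE) at(n->next)->prev = off_of(m);
    }

    struct blk *p = at(m->prev);
    if (p != m && !p->used) {
        /* Absorb m into the preceding free block. */
        if (lfree == m) lfree = p;
        p->next = m->next;
        if (m->next != HEAP_SIZE) at(m->next)->prev = off_of(p);
    }
}

static void set(uint16_t off, uint16_t prev, uint16_t next, uint8_t used)
{
    struct blk *b = at(off);
    b->prev = prev;
    b->next = next;
    b->used = used;
}

/* Hypothetical fixture: free A, just-freed B, free C, allocated D. */
void toy_fixture(void)
{
    set(0, 0, 32, 0);           /* A */
    set(32, 0, 64, 0);          /* B, just freed */
    set(64, 32, 96, 0);         /* C */
    set(96, 64, HEAP_SIZE, 1);  /* D */
    ram_end = at(HEAP_SIZE);
    ram_end->used = 1;
    ram_end->next = ram_end->prev = HEAP_SIZE;
    lfree = at(0);
}
```

In the fixture, one call on B merges A, B and C into a single free block that ends where D begins.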

| void mem_init(void) |

Zero the heap and initialize start, end and lowest-free.
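The initial layout can be sketched with a toy heap (hypothetical 128-byte heap, 8-byte headers, illustrative names such as toy_mem_init() and at()). Following the description above, it zeroes the heap, makes one free block that spans the whole heap, places an always-used sentinel at ram_end, and points lfree at the start.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define HEAP_SIZE 128u  /* hypothetical stand-in for MEM_SIZE_ALIGNED */
#define HDR 8u          /* hypothetical stand-in for SIZEOF_STRUCT_MEM */

/* Header modeled on struct mem: byte offsets plus a used flag. */
struct blk { uint16_t next, prev; uint8_t used; };

static union { uint32_t align; uint8_t bytes[HEAP_SIZE + 2u * HDR]; } heap;
static uint8_t *const ram = heap.bytes;
static struct blk *ram_end, *lfree;

static struct blk *at(uint16_t off) { return (struct blk *)(void *)(ram + off); }

/* Sketch of mem_init for the toy heap. */
void toy_mem_init(void)
{
    memset(ram, 0, sizeof heap.bytes);

    /* One free block covering the whole heap. */
    struct blk *first = at(0);
    first->next = HEAP_SIZE;
    first->prev = 0;
    first->used = 0;

    /* Sentinel at the end: always marked used so it is never allocated or merged. */
    ram_end = at(HEAP_SIZE);
    ram_end->used = 1;
    ram_end->next = HEAP_SIZE;
    ram_end->prev = HEAP_SIZE;

    /* The lowest free block is the first one. */
    lfree = first;
}
```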

| void mem_free(void *rmem) |

Put a struct mem back on the heap.

| rmem | is the data portion of a struct mem as returned by a previous call to mem_malloc() |
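The validation and bookkeeping on the free path can be sketched as follows. This assumes a hypothetical 128-byte toy heap with 8-byte headers, MEM_ALIGNMENT of 4 and MIN_SIZE_ALIGNED of 12; toy_free() and toy_fixture() are illustrative names. The checks are modeled on lwIP 2.x's mem_free() (NULL, alignment, heap bounds, double free), and the final call to plug_holes() is deliberately omitted to keep the example short.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MEM_ALIGNMENT 4u
#define HEAP_SIZE 128u  /* hypothetical stand-in for MEM_SIZE_ALIGNED */
#define HDR 8u          /* hypothetical stand-in for SIZEOF_STRUCT_MEM */
#define MIN_ALIGNED 12u /* hypothetical stand-in for MIN_SIZE_ALIGNED */

struct blk { uint16_t next, prev; uint8_t used; };

static union { uint32_t align; uint8_t bytes[HEAP_SIZE + 2u * HDR]; } heap;
static uint8_t *const ram = heap.bytes;
static struct blk *ram_end, *lfree;

static struct blk *at(uint16_t off) { return (struct blk *)(void *)(ram + off); }

/* Sketch of mem_free: validate rmem, step back to its header, mark it unused
   and lower lfree if needed. lwIP would then call plug_holes(), omitted here. */
void toy_free(void *rmem)
{
    if (rmem == NULL) return;
    uintptr_t p = (uintptr_t)rmem;
    if ((p & (MEM_ALIGNMENT - 1u)) != 0) return;          /* misaligned pointer */
    if (p < (uintptr_t)(ram + HDR) ||
        p + MIN_ALIGNED > (uintptr_t)ram_end) return;      /* outside this heap */

    struct blk *m = (struct blk *)(void *)((uint8_t *)rmem - HDR);
    if (!m->used) return;                                  /* double free */
    m->used = 0;
    if (m < lfree) lfree = m;                              /* keep lfree lowest */
}

/* Hypothetical fixture: two allocated blocks and nothing free. */
void toy_fixture(void)
{
    struct blk *a = at(0), *b = at(32);
    a->prev = 0; a->next = 32;        a->used = 1;
    b->prev = 0; b->next = HEAP_SIZE; b->used = 1;
    ram_end = at(HEAP_SIZE);
    ram_end->used = 1;
    ram_end->next = ram_end->prev = HEAP_SIZE;
    lfree = ram_end;
}
```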

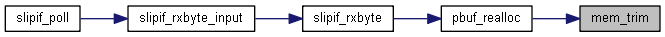

| void* mem_trim | ( | void * | rmem, |

| mem_size_t | newsize | ||

| ) |

Shrink memory returned by mem_malloc().

| rmem | pointer to memory allocated by mem_malloc() that is to be shrunk |

| newsize | required size after shrinking (needs to be smaller than or equal to the previous size) |
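The decision mem_trim() has to make can be isolated in a small function. This sketch assumes MEM_ALIGNMENT of 4, an 8-byte SIZEOF_STRUCT_MEM and a MIN_SIZE_ALIGNED of 12; toy_trim_plan() and the trim_plan enum are hypothetical names, and the branches are modeled on lwIP 2.x's mem_trim().

```c
#include <assert.h>
#include <stddef.h>

#define MEM_ALIGNMENT 4u
#define ALIGN_SIZE(s) (((s) + MEM_ALIGNMENT - 1u) & ~(MEM_ALIGNMENT - 1u))
#define HDR 8u          /* hypothetical SIZEOF_STRUCT_MEM */
#define MIN_ALIGNED 12u /* hypothetical MIN_SIZE_ALIGNED */

enum trim_plan {
    TRIM_FAIL,      /* growing is not supported: return NULL */
    TRIM_UNCHANGED, /* keep the block as it is and return rmem */
    TRIM_MOVE_NEXT, /* next block is free: move its header down to absorb the slack */
    TRIM_SPLIT      /* next block is used: carve the slack into a new free block */
};

/* cur_size: current payload size; req: requested size; next_is_free: whether
   the following block is unused. */
enum trim_plan toy_trim_plan(size_t cur_size, size_t req, int next_is_free)
{
    size_t newsize = ALIGN_SIZE(req);
    if (newsize < MIN_ALIGNED) newsize = MIN_ALIGNED;

    if (newsize > cur_size) return TRIM_FAIL;
    if (newsize == cur_size) return TRIM_UNCHANGED;
    if (next_is_free) return TRIM_MOVE_NEXT;
    /* Split only if the slack can hold a header plus a minimum-size payload. */
    if (newsize + HDR + MIN_ALIGNED <= cur_size) return TRIM_SPLIT;
    return TRIM_UNCHANGED;
}
```

If the next block is in use and the slack is too small for a header plus a minimum payload, the block simply keeps its old size.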

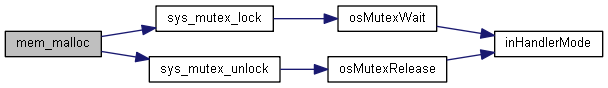

| void* mem_malloc | ( | mem_size_t | size | ) |

Allocate a block of memory with a minimum of 'size' bytes.

| size | is the minimum size of the requested block in bytes. |

Note that the returned value will always be aligned (as defined by MEM_ALIGNMENT).
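The search and split can be sketched on a toy heap. This is a minimal first-fit sketch modeled on lwIP 2.x's mem_malloc(), assuming a hypothetical 128-byte heap, 8-byte headers, MEM_ALIGNMENT of 4 and MIN_SIZE_ALIGNED of 12; toy_init(), toy_malloc() and at() are illustrative names. The search starts at lfree, which is why keeping lfree on the lowest free block makes it faster.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MEM_ALIGNMENT 4u
#define ALIGN_SIZE(s) (((s) + MEM_ALIGNMENT - 1u) & ~(MEM_ALIGNMENT - 1u))
#define HEAP_SIZE 128u  /* hypothetical MEM_SIZE_ALIGNED */
#define HDR 8u          /* hypothetical SIZEOF_STRUCT_MEM */
#define MIN_ALIGNED 12u /* hypothetical MIN_SIZE_ALIGNED */

struct blk { uint16_t next, prev; uint8_t used; }; /* modeled on struct mem */

static union { uint32_t align; uint8_t bytes[HEAP_SIZE + 2u * HDR]; } heap;
static uint8_t *const ram = heap.bytes;
static struct blk *ram_end, *lfree;

static struct blk *at(size_t off) { return (struct blk *)(void *)(ram + off); }

void toy_init(void)
{
    at(0)->next = HEAP_SIZE; at(0)->prev = 0; at(0)->used = 0;
    ram_end = at(HEAP_SIZE);
    ram_end->used = 1;
    ram_end->next = ram_end->prev = HEAP_SIZE;
    lfree = at(0);
}

/* First fit from lfree; split off the tail when it can hold a header plus
   a minimum-size payload. */
void *toy_malloc(size_t req)
{
    size_t size = ALIGN_SIZE(req);
    if (size < MIN_ALIGNED) size = MIN_ALIGNED;
    if (size > HEAP_SIZE) return NULL;

    for (size_t ptr = (size_t)((uint8_t *)lfree - ram); ptr < HEAP_SIZE - size;
         ptr = at(ptr)->next) {
        struct blk *m = at(ptr);
        size_t avail = m->next - (ptr + HDR); /* payload bytes in this block */
        if (m->used || avail < size) continue;

        if (avail >= size + HDR + MIN_ALIGNED) {
            size_t ptr2 = ptr + HDR + size;   /* header of the split-off free tail */
            struct blk *m2 = at(ptr2);
            m2->used = 0;
            m2->next = m->next;
            m2->prev = (uint16_t)ptr;
            m->next = (uint16_t)ptr2;
            if (m2->next != HEAP_SIZE) at(m2->next)->prev = (uint16_t)ptr2;
        }
        m->used = 1;

        if (m == lfree) { /* advance lfree to the next unused block (or ram_end) */
            struct blk *cur = lfree;
            while (cur->used && cur != ram_end) cur = at(cur->next);
            lfree = cur;
        }
        return (uint8_t *)m + HDR;
    }
    return NULL;
}
```

Starting from an empty toy heap, toy_malloc(10) returns ram + 8 and leaves a free block at offset 20; once the heap is exhausted, lfree points at ram_end and further requests return NULL.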

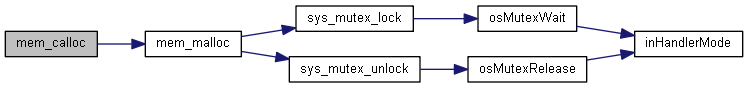

| void* mem_calloc | ( | mem_size_t | count, |

| mem_size_t | size | ||

| ) |

Contiguously allocates enough space for count objects that are size bytes of memory each and returns a pointer to the allocated memory.

The allocated memory is filled with bytes of value zero.

| count | number of objects to allocate |

| size | size of the objects to allocate |
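The overflow check and zeroing can be sketched as follows. It assumes a 16-bit mem_size_t, which lwIP typically uses for small heaps, and uses the C library's malloc() as a stand-in for mem_malloc() so the example is self-contained; toy_calloc() is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Assumption: a 16-bit mem_size_t, as used for small lwIP heaps. */
typedef uint16_t mem_size_t;

/* Multiply in size_t, reject products that do not fit mem_size_t, allocate,
   then zero the block. malloc() stands in for mem_malloc() here. */
void *toy_calloc(mem_size_t count, mem_size_t size)
{
    size_t total = (size_t)count * (size_t)size;
    if (total != (mem_size_t)total) {
        return NULL; /* product would be truncated when passed as mem_size_t */
    }
    void *p = malloc(total);
    if (p != NULL) {
        memset(p, 0, total);
    }
    return p;
}
```

For example, 300 objects of 300 bytes (90000 bytes) do not fit a 16-bit mem_size_t, so the request is refused instead of silently allocating a truncated size.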

| static u8_t* ram |

Pointer to the heap (ram_heap). For alignment, ram is now a pointer instead of an array.

| static struct mem* ram_end |

The last entry, always unused!

| static struct mem* lfree |

Pointer to the lowest free block; this is used for faster search.

| static sys_mutex_t mem_mutex |

Concurrent access protection.