
Prusa MINI Firmware overview


#include <stdlib.h>

#include <string.h>

#include "FreeRTOS.h"

#include "task.h"

#include "queue.h"

|

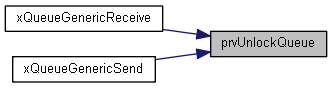

| static PRIVILEGED_FUNCTION void | prvUnlockQueue (Queue_t *const pxQueue) |

| |

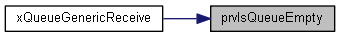

| static PRIVILEGED_FUNCTION BaseType_t | prvIsQueueEmpty (const Queue_t *pxQueue) |

| |

| static PRIVILEGED_FUNCTION BaseType_t | prvIsQueueFull (const Queue_t *pxQueue) |

| |

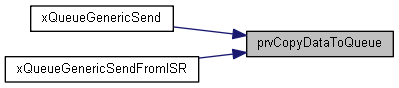

| static PRIVILEGED_FUNCTION BaseType_t | prvCopyDataToQueue (Queue_t *const pxQueue, const void *pvItemToQueue, const BaseType_t xPosition) |

| |

| static PRIVILEGED_FUNCTION void | prvCopyDataFromQueue (Queue_t *const pxQueue, void *const pvBuffer) |

| |

| static PRIVILEGED_FUNCTION void | prvInitialiseNewQueue (const UBaseType_t uxQueueLength, const UBaseType_t uxItemSize, uint8_t *pucQueueStorage, const uint8_t ucQueueType, Queue_t *pxNewQueue) |

| |

| BaseType_t | xQueueGenericReset (QueueHandle_t xQueue, BaseType_t xNewQueue) |

| |

| BaseType_t | xQueueGenericSend (QueueHandle_t xQueue, const void *const pvItemToQueue, TickType_t xTicksToWait, const BaseType_t xCopyPosition) |

| |

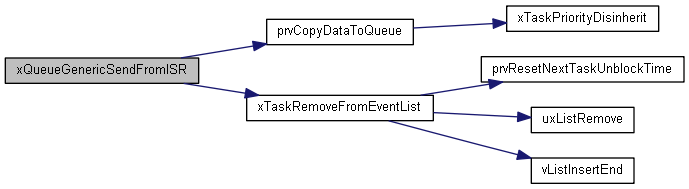

| BaseType_t | xQueueGenericSendFromISR (QueueHandle_t xQueue, const void *const pvItemToQueue, BaseType_t *const pxHigherPriorityTaskWoken, const BaseType_t xCopyPosition) |

| |

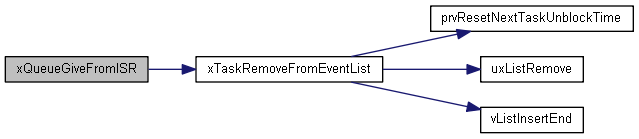

| BaseType_t | xQueueGiveFromISR (QueueHandle_t xQueue, BaseType_t *const pxHigherPriorityTaskWoken) |

| |

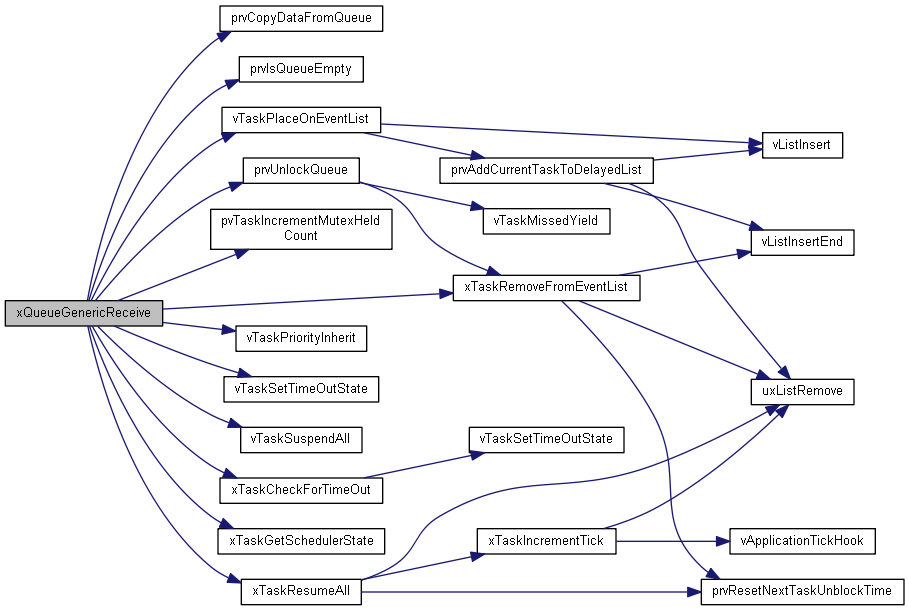

| BaseType_t | xQueueGenericReceive (QueueHandle_t xQueue, void *const pvBuffer, TickType_t xTicksToWait, const BaseType_t xJustPeeking) |

| |

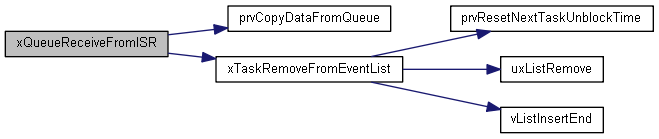

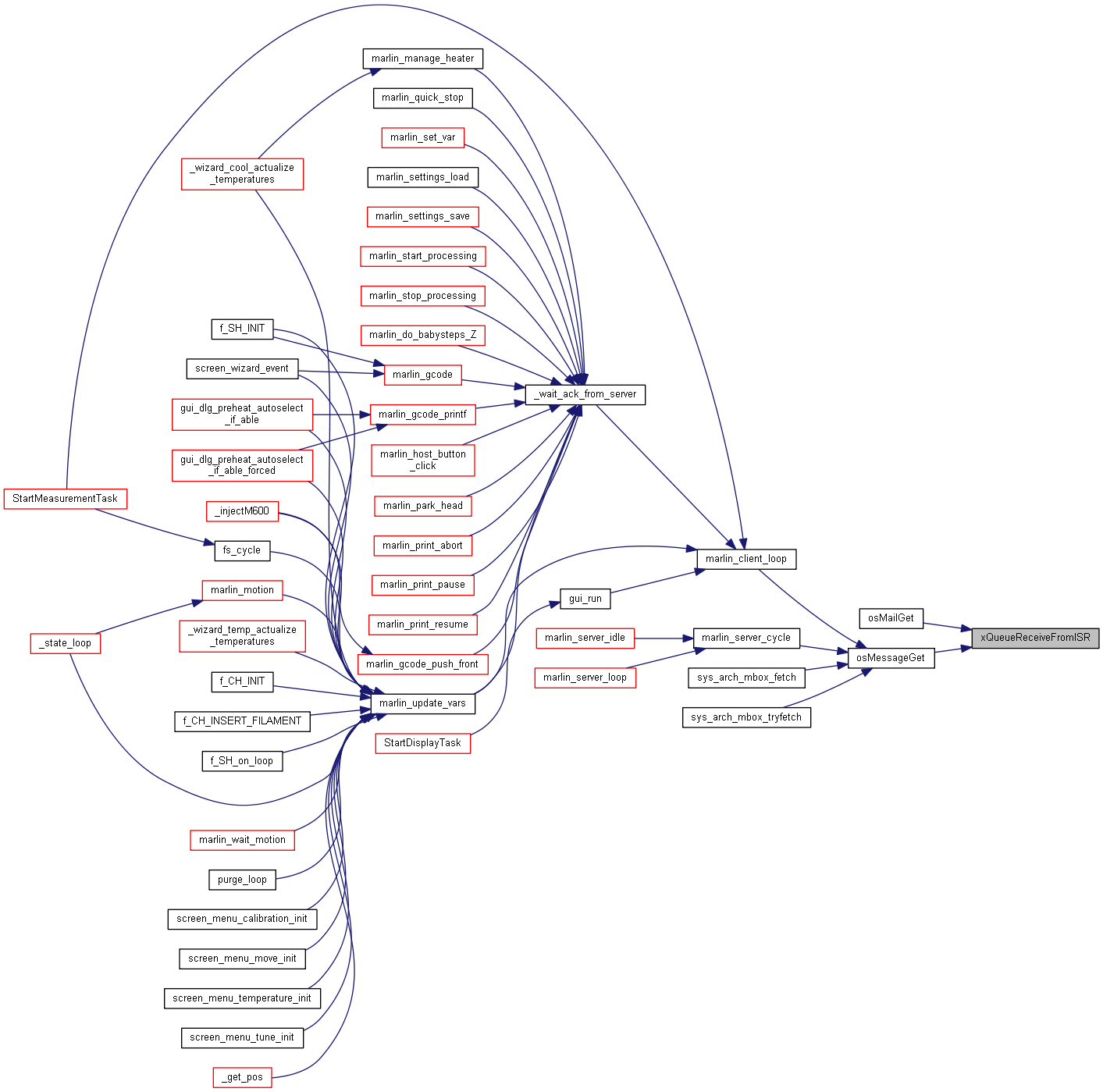

| BaseType_t | xQueueReceiveFromISR (QueueHandle_t xQueue, void *const pvBuffer, BaseType_t *const pxHigherPriorityTaskWoken) |

| |

| BaseType_t | xQueuePeekFromISR (QueueHandle_t xQueue, void *const pvBuffer) |

| |

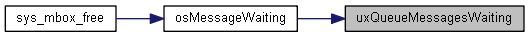

| UBaseType_t | uxQueueMessagesWaiting (const QueueHandle_t xQueue) |

| |

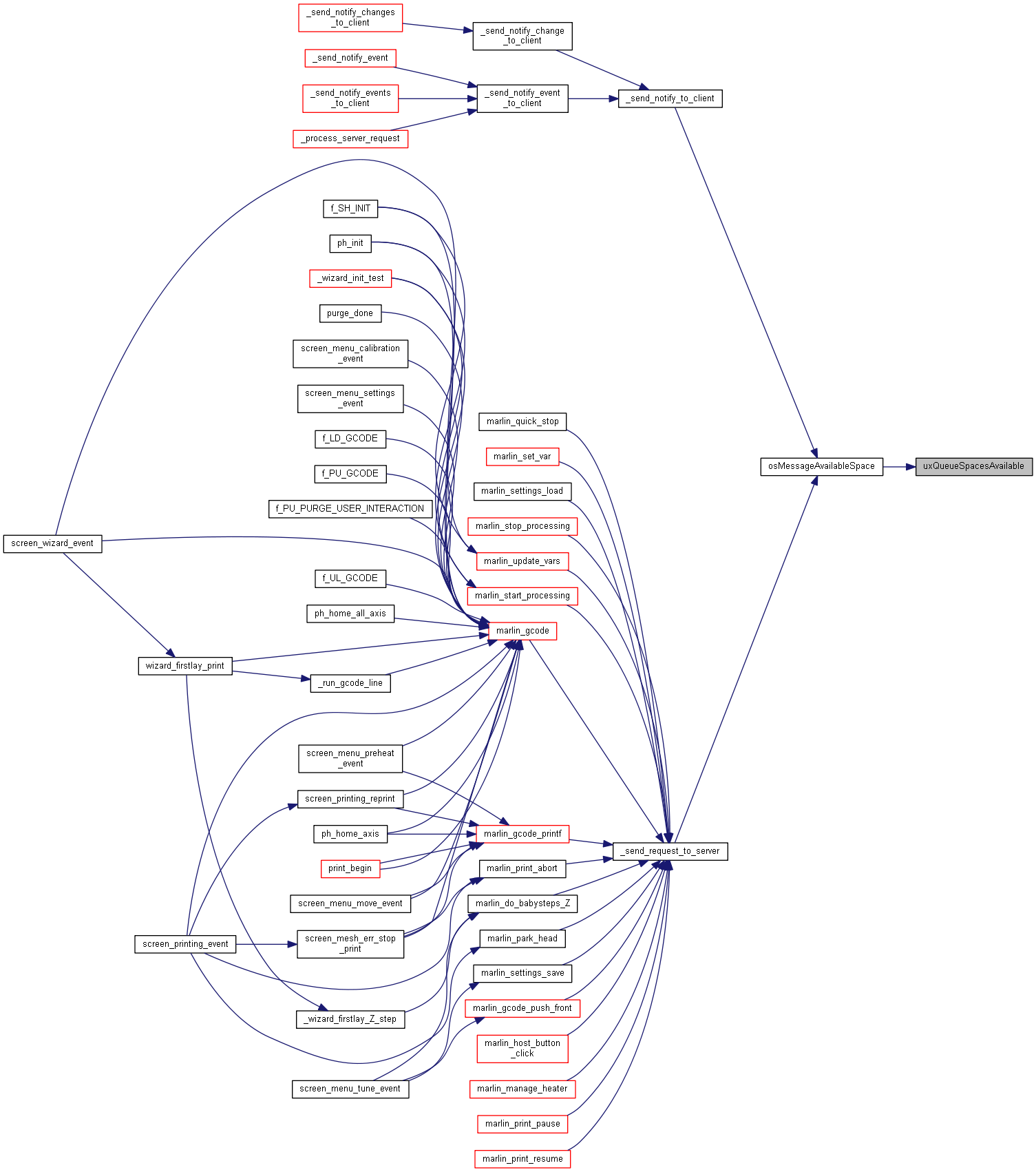

| UBaseType_t | uxQueueSpacesAvailable (const QueueHandle_t xQueue) |

| |

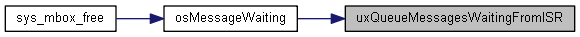

| UBaseType_t | uxQueueMessagesWaitingFromISR (const QueueHandle_t xQueue) |

| |

| void | vQueueDelete (QueueHandle_t xQueue) |

| |

| BaseType_t | xQueueIsQueueEmptyFromISR (const QueueHandle_t xQueue) |

| |

| BaseType_t | xQueueIsQueueFullFromISR (const QueueHandle_t xQueue) |

| |

◆ MPU_WRAPPERS_INCLUDED_FROM_API_FILE

`#define MPU_WRAPPERS_INCLUDED_FROM_API_FILE`

◆ queueUNLOCKED

`#define queueUNLOCKED ( ( int8_t ) -1 )`

◆ queueLOCKED_UNMODIFIED

`#define queueLOCKED_UNMODIFIED ( ( int8_t ) 0 )`

◆ pxMutexHolder

`#define pxMutexHolder pcTail`

◆ uxQueueType

`#define uxQueueType pcHead`

◆ queueQUEUE_IS_MUTEX

`#define queueQUEUE_IS_MUTEX NULL`
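The three macros above let queue.c reuse two pointer fields when a queue acts as a mutex: `uxQueueType` is an alias for `pcHead`, `pxMutexHolder` is an alias for `pcTail`, and a `pcHead` equal to `queueQUEUE_IS_MUTEX` (NULL) marks the structure as a mutex. The sketch below reproduces that aliasing on a stripped-down structure. `DemoQueue_t`, `demo_make_mutex` and `demo_is_mutex` are hypothetical names for illustration, not FreeRTOS API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, stripped-down control block: only the two pointer fields
   that the aliasing macros repurpose. This is not the real Queue_t. */
typedef struct {
    int8_t *pcHead;
    int8_t *pcTail;
} DemoQueue_t;

/* Same aliasing trick as queue.c: give the reused fields mutex-specific names. */
#define uxQueueType         pcHead
#define pxMutexHolder       pcTail
#define queueQUEUE_IS_MUTEX NULL

/* Turn the structure into a mutex owned by 'owner'. */
static void demo_make_mutex(DemoQueue_t *q, void *owner)
{
    assert(q != NULL);
    q->uxQueueType = queueQUEUE_IS_MUTEX;  /* expands to q->pcHead = NULL */
    q->pxMutexHolder = (int8_t *) owner;   /* expands to q->pcTail = owner */
}

/* A NULL pcHead is the key that identifies a mutex. */
static int demo_is_mutex(const DemoQueue_t *q)
{
    assert(q != NULL);
    return q->uxQueueType == queueQUEUE_IS_MUTEX;
}
```

Because the aliases are plain textual macros, a mutex costs no extra fields in the control block; the trade-off is that any code touching `pcHead` must first know which role the structure is playing.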

◆ queueSEMAPHORE_QUEUE_ITEM_LENGTH

`#define queueSEMAPHORE_QUEUE_ITEM_LENGTH ( ( UBaseType_t ) 0 )`

◆ queueMUTEX_GIVE_BLOCK_TIME

`#define queueMUTEX_GIVE_BLOCK_TIME ( ( TickType_t ) 0U )`

◆ queueYIELD_IF_USING_PREEMPTION

`#define queueYIELD_IF_USING_PREEMPTION()`

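◆ prvLockQueue

`#define prvLockQueue( pxQueue )`

Value:

    taskENTER_CRITICAL(); \
    { \
        if( ( pxQueue )->cRxLock == queueUNLOCKED ) \
        { \
            ( pxQueue )->cRxLock = queueLOCKED_UNMODIFIED; \
        } \
        if( ( pxQueue )->cTxLock == queueUNLOCKED ) \
        { \
            ( pxQueue )->cTxLock = queueLOCKED_UNMODIFIED; \
        } \
    } \
    taskEXIT_CRITICAL()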
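`prvLockQueue` moves a queue from `queueUNLOCKED` to `queueLOCKED_UNMODIFIED`. While it is locked, ISR-side functions do not touch the event lists; they increment `cTxLock` (see the `pxQueue->cTxLock = ( int8_t ) ( cTxLock + 1 );` excerpts further down), and `prvUnlockQueue` later replays those posts. The following is a single-threaded sketch of that idea, assuming a hypothetical `DemoLockQueue_t` in which the list of blocked receivers is reduced to a counter and critical sections are omitted. It models the transmit side only; `cRxLock` plays the same role for tasks waiting to send.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Same values as the queue.c macros. */
#define queueUNLOCKED          ((int8_t) -1)
#define queueLOCKED_UNMODIFIED ((int8_t) 0)

/* Hypothetical model: blocked receivers are a counter, no critical sections. */
typedef struct {
    volatile int8_t cTxLock;
    unsigned waitingReceivers;   /* stand-in for xTasksWaitingToReceive */
    unsigned wakeups;            /* receivers unblocked so far */
} DemoLockQueue_t;

/* Like prvLockQueue (transmit side only): lock an unlocked queue. */
static void demo_lock(DemoLockQueue_t *q)
{
    assert(q != NULL);
    if (q->cTxLock == queueUNLOCKED) {
        q->cTxLock = queueLOCKED_UNMODIFIED;
    }
}

/* An ISR posting data: wake a receiver directly only if the queue is unlocked. */
static void demo_isr_post(DemoLockQueue_t *q)
{
    if (q->cTxLock == queueUNLOCKED) {
        if (q->waitingReceivers > 0) {
            q->waitingReceivers--;
            q->wakeups++;
        }
    } else {
        /* Locked: just record that one more item arrived. */
        q->cTxLock = (int8_t) (q->cTxLock + 1);
    }
}

/* Like the transmit half of prvUnlockQueue: one wake-up per recorded post. */
static void demo_unlock(DemoLockQueue_t *q)
{
    int8_t cTxLock = q->cTxLock;
    while (cTxLock > queueLOCKED_UNMODIFIED) {
        if (q->waitingReceivers == 0) {
            break;               /* nobody left to wake */
        }
        q->waitingReceivers--;
        q->wakeups++;
        --cTxLock;
    }
    q->cTxLock = queueUNLOCKED;
}
```

In the kernel the replay runs inside a critical section and each wake-up goes through `xTaskRemoveFromEventList`; the counter here only makes the bookkeeping visible.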

◆ xQUEUE

◆ Queue_t

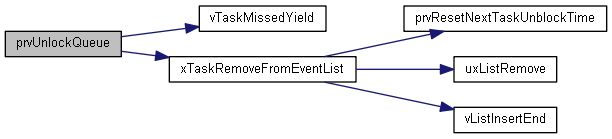

◆ prvUnlockQueue()

`int8_t cTxLock = pxQueue->cTxLock;`

`#if ( configUSE_QUEUE_SETS == 1 )`

`if( pxQueue->pxQueueSetContainer != NULL )`

`int8_t cRxLock = pxQueue->cRxLock;`

◆ prvIsQueueEmpty()

◆ prvIsQueueFull()

◆ prvCopyDataToQueue()

`#if ( configUSE_MUTEXES == 1 )`

`pxQueue->pxMutexHolder = NULL;`

`--uxMessagesWaiting;`
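`prvCopyDataToQueue` treats the storage area as a ring buffer. Sending to the back writes at `pcWriteTo`; sending to the front or overwriting writes into the slot just behind the next read position and moves `pcReadFrom` backwards. The `--uxMessagesWaiting;` excerpt belongs to the overwrite case: it cancels the increment that follows, so a one-item queue stays at one. The sketch below assumes a hypothetical `DemoRing_t` with plain `unsigned` counters and leaves out the mutex and queue-set handling. The three position constants use the same values as `queueSEND_TO_BACK`, `queueSEND_TO_FRONT` and `queueOVERWRITE` in queue.h.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Same values as the queue.h position constants. */
#define queueSEND_TO_BACK  0
#define queueSEND_TO_FRONT 1
#define queueOVERWRITE     2

/* Hypothetical model of the storage-related Queue_t fields. */
typedef struct {
    int8_t *pcHead, *pcTail;     /* storage bounds: [pcHead, pcTail) */
    int8_t *pcWriteTo;           /* next free slot at the back */
    int8_t *pcReadFrom;          /* slot of the most recently read item */
    unsigned uxMessagesWaiting, uxLength, uxItemSize;
} DemoRing_t;

/* Empty-queue layout: write at the start, "last read" at the final slot. */
static void demo_ring_init(DemoRing_t *q, int8_t *storage,
                           unsigned length, unsigned itemSize)
{
    assert(length > 0U && itemSize > 0U);
    q->pcHead = storage;
    q->pcTail = storage + length * itemSize;
    q->pcWriteTo = storage;
    q->pcReadFrom = storage + (length - 1U) * itemSize;
    q->uxMessagesWaiting = 0U;
    q->uxLength = length;
    q->uxItemSize = itemSize;
}

static void demo_copy_to(DemoRing_t *q, const void *item, int position)
{
    assert(position != queueOVERWRITE || q->uxLength == 1U);
    assert(position == queueOVERWRITE || q->uxMessagesWaiting < q->uxLength);

    if (position == queueSEND_TO_BACK) {
        memcpy(q->pcWriteTo, item, q->uxItemSize);
        q->pcWriteTo += q->uxItemSize;
        if (q->pcWriteTo >= q->pcTail) {
            q->pcWriteTo = q->pcHead;          /* wrap to the start */
        }
    } else {
        /* Front/overwrite: fill the slot that will be read next. */
        memcpy(q->pcReadFrom, item, q->uxItemSize);
        /* Wrap check before moving, so the pointer never leaves the array. */
        if (q->pcReadFrom == q->pcHead) {
            q->pcReadFrom = q->pcTail - q->uxItemSize;
        } else {
            q->pcReadFrom -= q->uxItemSize;
        }
        if (position == queueOVERWRITE && q->uxMessagesWaiting > 0U) {
            --q->uxMessagesWaiting;            /* an item was replaced */
        }
    }
    ++q->uxMessagesWaiting;
}
```

The wrap test in the front branch is written before the decrement rather than after it, which keeps the sketch free of an out-of-bounds pointer value; the observable layout is the same.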

◆ prvCopyDataFromQueue()
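Reading mirrors writing: `pcReadFrom` points at the most recently read slot, so `prvCopyDataFromQueue` advances it by one item, wraps at `pcTail`, and then copies. A minimal sketch with a hypothetical `DemoReader_t`; in FreeRTOS the calling receive function, not this copy routine, decrements `uxMessagesWaiting`.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical model of the fields the read path uses. */
typedef struct {
    int8_t *pcHead, *pcTail;     /* storage bounds: [pcHead, pcTail) */
    int8_t *pcReadFrom;          /* slot of the most recently read item */
    unsigned uxItemSize;
} DemoReader_t;

/* Advance first, wrap, then copy the item out. */
static void demo_copy_from(DemoReader_t *q, void *out)
{
    assert(q != NULL && out != NULL);
    if (q->uxItemSize == 0U) {
        return;                  /* semaphores carry no data */
    }
    q->pcReadFrom += q->uxItemSize;
    if (q->pcReadFrom >= q->pcTail) {
        q->pcReadFrom = q->pcHead;
    }
    memcpy(out, q->pcReadFrom, q->uxItemSize);
}
```

Keeping `pcReadFrom` one slot behind the next item is what lets a send-to-front write into `pcReadFrom` directly.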

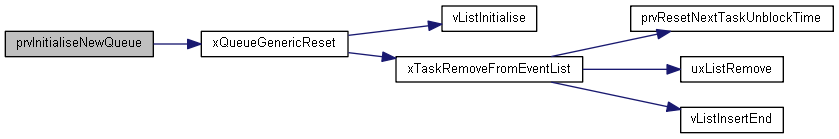

◆ prvInitialiseNewQueue()

`( void ) ucQueueType;`

`pxNewQueue->pcHead = ( int8_t * ) pxNewQueue;`

`pxNewQueue->pcHead = ( int8_t * ) pucQueueStorage;`

`pxNewQueue->uxLength = uxQueueLength;`

`#if ( configUSE_TRACE_FACILITY == 1 )`

`pxNewQueue->ucQueueType = ucQueueType;`

`#if( configUSE_QUEUE_SETS == 1 )`

`pxNewQueue->pxQueueSetContainer = NULL;`
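The two `pcHead` assignments in `prvInitialiseNewQueue` cover queues with and without storage. When the item size is zero (semaphores use `queueSEMAPHORE_QUEUE_ITEM_LENGTH`), there is nothing to point at, but `pcHead` cannot stay NULL because NULL is the key that marks a mutex; pointing it at the queue structure itself gives a harmless non-NULL value. A sketch with hypothetical names (`DemoQueueHdr_t`, `demo_init_new_queue`); the real function also resets the queue through `xQueueGenericReset`, which is not modelled here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical subset of the control block. */
typedef struct {
    int8_t *pcHead;
    unsigned uxLength, uxItemSize;
} DemoQueueHdr_t;

static void demo_init_new_queue(unsigned length, unsigned itemSize,
                                uint8_t *storage, DemoQueueHdr_t *q)
{
    assert(q != NULL);
    assert(itemSize == 0U || storage != NULL);
    if (itemSize == 0U) {
        /* No storage area: use the structure's own address, never NULL,
           so the queue cannot be mistaken for a mutex. */
        q->pcHead = (int8_t *) q;
    } else {
        q->pcHead = (int8_t *) storage;
    }
    q->uxLength = length;
    q->uxItemSize = itemSize;
}
```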

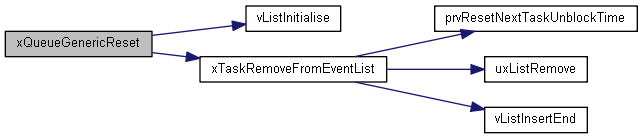

◆ xQueueGenericReset()

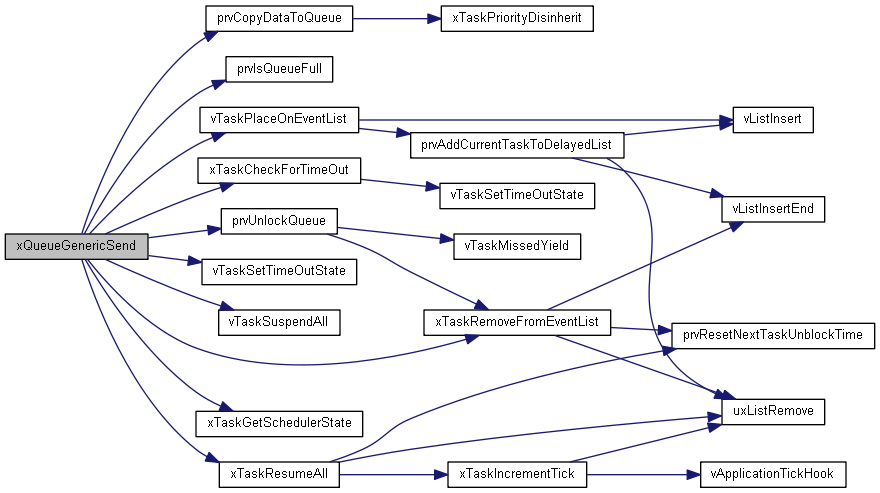

◆ xQueueGenericSend()

`#if ( ( INCLUDE_xTaskGetSchedulerState == 1 ) || ( configUSE_TIMERS == 1 ) )`

`#if ( configUSE_QUEUE_SETS == 1 )`

`if( pxQueue->pxQueueSetContainer != NULL )`

`if( prvNotifyQueueSetContainer( pxQueue, xCopyPosition ) != pdFALSE )`

`else if( xYieldRequired != pdFALSE )`

`else if( xYieldRequired != pdFALSE )`

`else if( xEntryTimeSet == pdFALSE )`

◆ xQueueGenericSendFromISR()

`const int8_t cTxLock = pxQueue->cTxLock;`

`#if ( configUSE_QUEUE_SETS == 1 )`

`if( pxQueue->pxQueueSetContainer != NULL )`

`if( prvNotifyQueueSetContainer( pxQueue, xCopyPosition ) != pdFALSE )`

`if( pxHigherPriorityTaskWoken != NULL )`

`*pxHigherPriorityTaskWoken = pdTRUE;`

`if( pxHigherPriorityTaskWoken != NULL )`

`*pxHigherPriorityTaskWoken = pdTRUE;`

`if( pxHigherPriorityTaskWoken != NULL )`

`*pxHigherPriorityTaskWoken = pdTRUE;`

`pxQueue->cTxLock = ( int8_t ) ( cTxLock + 1 );`
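An ISR can never block, so `xQueueGenericSendFromISR` either succeeds at once or returns `errQUEUE_FULL`. The excerpts show the two paths after a successful copy: with the queue unlocked, unblocking a receiver may set `*pxHigherPriorityTaskWoken` (after a NULL check, since the pointer is optional); with the queue locked, `cTxLock` is incremented instead. The model below is a hypothetical single-threaded sketch: it omits the data copy and assumes any unblocked receiver outranks the interrupted task, whereas the kernel sets the flag only when `xTaskRemoveFromEventList` reports a higher-priority task.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef long BaseType_t;

/* Same values as projdefs.h and queue.c. */
#define pdFALSE       0
#define pdTRUE        1
#define pdPASS        1
#define errQUEUE_FULL 0
#define queueUNLOCKED ((int8_t) -1)

/* Hypothetical model of the fields this path touches. */
typedef struct {
    unsigned uxMessagesWaiting, uxLength;
    unsigned waitingReceivers;   /* stand-in for xTasksWaitingToReceive */
    int8_t cTxLock;
} DemoIsrQueue_t;

static BaseType_t demo_send_from_isr(DemoIsrQueue_t *q,
                                     BaseType_t *pxHigherPriorityTaskWoken)
{
    assert(q != NULL);
    if (q->uxMessagesWaiting >= q->uxLength) {
        return errQUEUE_FULL;    /* no blocking from an ISR */
    }
    q->uxMessagesWaiting++;      /* data copy omitted */
    if (q->cTxLock == queueUNLOCKED) {
        if (q->waitingReceivers > 0U) {
            q->waitingReceivers--;
            if (pxHigherPriorityTaskWoken != NULL) {
                *pxHigherPriorityTaskWoken = pdTRUE;
            }
        }
    } else {
        /* A task holds the lock: leave the wake-up to the unlock step. */
        q->cTxLock = (int8_t) (q->cTxLock + 1);
    }
    return pdPASS;
}
```

In application code the flag is normally initialised to `pdFALSE`, passed to the FromISR call, and handed to the port's `portYIELD_FROM_ISR()` at the end of the handler so any context switch happens on interrupt exit.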

◆ xQueueGiveFromISR()

`if( uxMessagesWaiting < pxQueue->uxLength )`

`const int8_t cTxLock = pxQueue->cTxLock;`

`#if ( configUSE_QUEUE_SETS == 1 )`

`if( pxQueue->pxQueueSetContainer != NULL )`

`if( pxHigherPriorityTaskWoken != NULL )`

`*pxHigherPriorityTaskWoken = pdTRUE;`

`if( pxHigherPriorityTaskWoken != NULL )`

`*pxHigherPriorityTaskWoken = pdTRUE;`

`if( pxHigherPriorityTaskWoken != NULL )`

`*pxHigherPriorityTaskWoken = pdTRUE;`

`pxQueue->cTxLock = ( int8_t ) ( cTxLock + 1 );`
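`xQueueGiveFromISR` is the semaphore counterpart of the send path. Semaphores have an item size of zero, so giving one copies nothing: the count in `uxMessagesWaiting` is the semaphore value, and it is incremented only while below `uxLength`, as the first excerpt's test shows. A sketch with a hypothetical `DemoSemaphore_t`; the wake-up and `cTxLock` handling from the excerpts are left out.

```c
#include <assert.h>
#include <stddef.h>

typedef long BaseType_t;

/* Same values as projdefs.h. */
#define pdPASS        1
#define errQUEUE_FULL 0

/* Hypothetical counting semaphore built on the queue counters. */
typedef struct {
    volatile unsigned uxMessagesWaiting;  /* current count */
    unsigned uxLength;                    /* maximum count */
} DemoSemaphore_t;

static BaseType_t demo_give_from_isr(DemoSemaphore_t *s)
{
    assert(s != NULL);
    /* Take one snapshot of the volatile counter, as queue.c does. */
    const unsigned uxMessagesWaiting = s->uxMessagesWaiting;
    if (uxMessagesWaiting < s->uxLength) {
        s->uxMessagesWaiting = uxMessagesWaiting + 1U;  /* no payload to copy */
        return pdPASS;
    }
    return errQUEUE_FULL;                 /* already at maximum count */
}
```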

◆ xQueueGenericReceive()

`int8_t *pcOriginalReadPosition;`

`#if ( ( INCLUDE_xTaskGetSchedulerState == 1 ) || ( configUSE_TIMERS == 1 ) )`

`#if ( configUSE_MUTEXES == 1 )`

`else if( xEntryTimeSet == pdFALSE )`

`#if ( configUSE_MUTEXES == 1 )`
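`xQueueGenericReceive` handles both receive and peek. It saves `pcReadFrom` in `pcOriginalReadPosition` before copying; when `xJustPeeking` is set it restores the pointer so the item stays queued, and otherwise it decrements `uxMessagesWaiting`. The sketch below is simplified and non-blocking (it returns 0 where the kernel would wait up to `xTicksToWait`); `DemoRxQueue_t`, `demo_copy_out` and `demo_receive` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical model of the receive-side fields. */
typedef struct {
    int8_t *pcHead, *pcTail, *pcReadFrom;
    unsigned uxItemSize, uxMessagesWaiting;
} DemoRxQueue_t;

/* Advance-wrap-copy, as in prvCopyDataFromQueue. */
static void demo_copy_out(DemoRxQueue_t *q, void *out)
{
    q->pcReadFrom += q->uxItemSize;
    if (q->pcReadFrom >= q->pcTail) {
        q->pcReadFrom = q->pcHead;
    }
    memcpy(out, q->pcReadFrom, q->uxItemSize);
}

/* Returns 1 if an item was copied, 0 if the queue was empty. */
static int demo_receive(DemoRxQueue_t *q, void *out, int justPeeking)
{
    assert(q != NULL && out != NULL);
    if (q->uxMessagesWaiting == 0U) {
        return 0;                                /* the kernel may block here */
    }
    int8_t *pcOriginalReadPosition = q->pcReadFrom;
    demo_copy_out(q, out);
    if (justPeeking) {
        q->pcReadFrom = pcOriginalReadPosition;  /* leave the item queued */
    } else {
        q->uxMessagesWaiting--;                  /* item consumed */
    }
    return 1;
}
```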

◆ xQueueReceiveFromISR()

`const int8_t cRxLock = pxQueue->cRxLock;`

`if( pxHigherPriorityTaskWoken != NULL )`

`*pxHigherPriorityTaskWoken = pdTRUE;`

`pxQueue->cRxLock = ( int8_t ) ( cRxLock + 1 );`

◆ xQueuePeekFromISR()

`int8_t *pcOriginalReadPosition;`

◆ uxQueueMessagesWaiting()

`uxReturn = ( ( Queue_t * ) xQueue )->uxMessagesWaiting;`

◆ uxQueueSpacesAvailable()

`pxQueue = ( Queue_t * ) xQueue;`

◆ uxQueueMessagesWaitingFromISR()

`uxReturn = ( ( Queue_t * ) xQueue )->uxMessagesWaiting;`
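The three count accessors read `uxMessagesWaiting`: the task-level versions do so inside a critical section, `uxQueueMessagesWaitingFromISR` reads it directly, and `uxQueueSpacesAvailable` derives the free slots from `uxLength`. A minimal single-threaded sketch with hypothetical names, so no critical section is shown:

```c
#include <assert.h>
#include <stddef.h>

typedef unsigned long UBaseType_t;

/* Hypothetical subset of the control block. */
typedef struct {
    volatile UBaseType_t uxMessagesWaiting;  /* items currently queued */
    UBaseType_t uxLength;                    /* capacity in items */
} DemoCounts_t;

/* Like uxQueueMessagesWaiting(): a read of the counter. */
static UBaseType_t demo_messages_waiting(const DemoCounts_t *q)
{
    assert(q != NULL);
    return q->uxMessagesWaiting;
}

/* Like uxQueueSpacesAvailable(): free slots = capacity - occupied. */
static UBaseType_t demo_spaces_available(const DemoCounts_t *q)
{
    assert(q != NULL && q->uxMessagesWaiting <= q->uxLength);
    return q->uxLength - q->uxMessagesWaiting;
}
```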

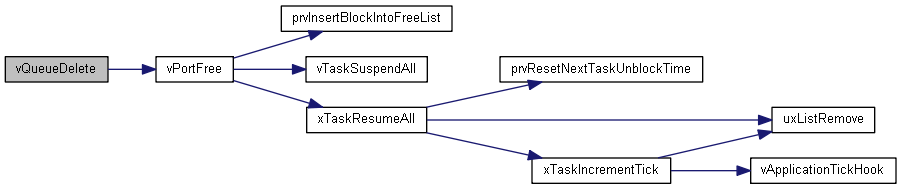

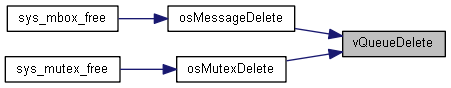

◆ vQueueDelete()

`#if ( configQUEUE_REGISTRY_SIZE > 0 )`

`#if( ( configSUPPORT_DYNAMIC_ALLOCATION == 1 ) && ( configSUPPORT_STATIC_ALLOCATION == 0 ) )`

`#elif( ( configSUPPORT_DYNAMIC_ALLOCATION == 1 ) && ( configSUPPORT_STATIC_ALLOCATION == 1 ) )`
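The allocation branches above decide whether `vQueueDelete` returns memory to the heap: with only dynamic allocation enabled, every queue came from the heap and is freed with `vPortFree`; with both enabled, only queues whose `ucStaticallyAllocated` flag is false are freed; in a static-only build nothing is freed. The sketch models those branches with hypothetical `DEMO_SUPPORT_DYNAMIC` / `DEMO_SUPPORT_STATIC` macros standing in for the `configSUPPORT_*` options, and uses standard `free()` in place of `vPortFree()`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical stand-ins for configSUPPORT_DYNAMIC_ALLOCATION and
   configSUPPORT_STATIC_ALLOCATION; change them to select another branch. */
#define DEMO_SUPPORT_DYNAMIC 1
#define DEMO_SUPPORT_STATIC  1

typedef struct {
    uint8_t ucStaticallyAllocated;  /* non-zero when the caller owns the memory */
} DemoDelQueue_t;

/* Returns 1 when the control block was handed back to the heap. */
static int demo_queue_delete(DemoDelQueue_t *q)
{
    assert(q != NULL);
#if (DEMO_SUPPORT_DYNAMIC == 1) && (DEMO_SUPPORT_STATIC == 0)
    free(q);                        /* every queue came from the heap */
    return 1;
#elif (DEMO_SUPPORT_DYNAMIC == 1) && (DEMO_SUPPORT_STATIC == 1)
    if (q->ucStaticallyAllocated == 0U) {
        free(q);                    /* heap-allocated: release it */
        return 1;
    }
    return 0;                       /* static: memory belongs to the caller */
#else
    (void) q;                       /* static-only build: nothing to free */
    return 0;
#endif
}
```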

◆ xQueueIsQueueEmptyFromISR()

◆ xQueueIsQueueFullFromISR()

`if( ( ( Queue_t * ) xQueue )->uxMessagesWaiting == ( ( Queue_t * ) xQueue )->uxLength )`
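`xQueueIsQueueFullFromISR` compares `uxMessagesWaiting` with `uxLength`, as in the excerpt, and `xQueueIsQueueEmptyFromISR` checks the count against zero; neither enters a critical section. A sketch with hypothetical names, where `assert` stands in for the `configASSERT` check on the handle:

```c
#include <assert.h>
#include <stddef.h>

typedef long BaseType_t;
typedef unsigned long UBaseType_t;

/* Same values as projdefs.h. */
#define pdFALSE 0
#define pdTRUE  1

/* Hypothetical subset of the control block. */
typedef struct {
    UBaseType_t uxMessagesWaiting, uxLength;
} DemoFlags_t;

static BaseType_t demo_is_full(const DemoFlags_t *q)
{
    assert(q != NULL);
    return (q->uxMessagesWaiting == q->uxLength) ? pdTRUE : pdFALSE;
}

static BaseType_t demo_is_empty(const DemoFlags_t *q)
{
    assert(q != NULL);
    return (q->uxMessagesWaiting == 0U) ? pdTRUE : pdFALSE;
}
```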
